Adapted from the original graphic found here.

Every organization must periodically perform different types of security assessments on its networks, computers, and applications. The primary purpose of most security assessments is to find and confirm vulnerabilities so that we can patch, mitigate, or remove them. There are different ways and methodologies to test how secure a computer system is, and some types of security assessments are more appropriate for certain networks than others, but they all serve a purpose in improving cybersecurity. Organizations have different compliance requirements and risk tolerances, face different threats, and have different business models that determine the types of systems they run externally and internally. Some organizations have a much more mature security posture than their peers and can focus on advanced red team simulations conducted by third parties, while others are still working to establish baseline security. Regardless, all organizations must stay on top of both legacy and recent vulnerabilities and have a system for detecting and mitigating risks to their systems and data.

Vulnerability assessments are appropriate for all organizations and networks. A vulnerability assessment is based on a particular security standard, and compliance with these standards is analyzed (e.g., going through a checklist).

A vulnerability assessment can be based on various security standards. Which standards apply to a particular network will depend on many factors. These factors can include industry-specific and regional data security regulations, the size and form of a company's network, which types of applications they use or develop, and their security maturity level.

Vulnerability assessments may be performed independently or alongside other security assessments depending on an organization's situation.

Here at Hack The Box, we love penetration tests, otherwise known as pentests. Our labs and many of our other Academy courses focus on pentesting.

They're called penetration tests because testers conduct them to determine if and how they can penetrate a network. A pentest is a type of simulated cyber attack, and pentesters conduct actions that a threat actor may perform to see if certain kinds of exploits are possible. The key difference between a pentest and an actual cyber attack is that the former is done with the full legal consent of the entity being pentested. Whether a pentester is an employee or a third-party contractor, they will need to sign a lengthy legal document with the target company that describes what they're allowed to do and what they're not allowed to do.

As with a vulnerability assessment, an effective pentest will result in a detailed report full of information that can be used to improve a network's security. All kinds of pentests can be performed according to an organization's specific needs.

Black box pentesting is done with no knowledge of a network's configuration or applications. If the pentest is internal, the tester will typically be given only network access (or an Ethernet port, in which case they may have to bypass Network Access Control, or NAC) and must perform their own discovery of IP addresses. If the pentest is external, they will be given nothing more than the company name. This type of pentesting is usually conducted by third parties from the perspective of an external attacker. Often the customer will ask the pentester to show them the discovered internal or external IP addresses and network ranges so they can confirm ownership and note any hosts that should be considered out-of-scope.

Grey box pentesting is done with limited knowledge of the network being tested, from a perspective equivalent to that of an employee who doesn't work in the IT department, such as a receptionist or customer service agent. In a grey box situation, the customer will typically give the tester in-scope network ranges or individual IP addresses.

White box pentesting is typically conducted by giving the penetration tester full access to all systems, configurations, build documents, etc., and source code if web applications are in-scope. The goal here is to discover as many flaws as possible that would be difficult or impossible to discover blindly in a reasonable amount of time.

Penetration testers must have knowledge of many different technologies, but most specialize in a particular area.

Application pentesters assess web applications, thick-client applications, APIs, and mobile applications. They will often be well-versed in source code review and able to assess a given web application from a black box or white box standpoint (typically a secure code review).

Network or infrastructure pentesters assess all aspects of a computer network, including its networking devices such as routers and firewalls, workstations, servers, and applications. These types of penetration testers typically must have a strong understanding of networking, Windows, Linux, Active Directory, and at least one scripting language. Network vulnerability scanners, such as Nessus, can be used alongside other tools during network pentesting, but network vulnerability scanning is only a part of a proper pentest. It's important to note that there are different types of pentests (evasive, non-evasive, hybrid evasive). A scanner such as Nessus would only be used during a non-evasive pentest whose goal is to find as many flaws in the network as possible. Also, vulnerability scanning would only be a small part of this type of penetration test. Vulnerability scanners are helpful but limited and cannot replace the human touch and other tools and techniques.

Physical pentesters try to leverage physical security weaknesses and breakdowns in processes to gain access to a facility such as a data center or office building.

Social engineering pentesters test human beings.

Pentesting is most appropriate for organizations with a medium or high security maturity level. Security maturity measures how well developed a company's cybersecurity program is, and security maturity takes years to build. It involves hiring knowledgeable cybersecurity professionals, having well-designed security policies and enforcement (such as configuration, patch, and vulnerability management), baseline hardening standards for all device types in the network, strong regulatory compliance, well-executed cyber incident response plans, a seasoned CSIRT (computer security incident response team), an established change control process, a CISO (chief information security officer), a CTO (chief technology officer), frequent security testing performed over the years, and strong security culture. Security culture is all about the attitude and habits employees have toward cybersecurity. Part of this can be taught through security awareness training programs and part by building security into the company's culture. Everyone, from secretaries to sysadmins to C-level staff, should be security conscious, understand how to avoid risky practices, and be educated on recognizing suspicious activity that should be reported to security staff.

Organizations with a lower security maturity level may want to focus on vulnerability assessments because a pentest could find too many vulnerabilities to be useful and could overwhelm staff tasked with remediation. Before penetration testing is considered, there should be a track record of vulnerability assessments and actions taken in response to vulnerability assessments.

Vulnerability Assessments and Penetration Tests are two completely different assessments. Vulnerability assessments look for vulnerabilities in networks without simulating cyber attacks. All companies should perform vulnerability assessments every so often. A wide variety of security standards could be used for a vulnerability assessment, such as GDPR compliance or OWASP web application security standards. A vulnerability assessment goes through a checklist.

During a vulnerability assessment, the assessor will typically run a vulnerability scan and then perform validation on critical, high, and medium-risk vulnerabilities. This means that they will show evidence that the vulnerability exists and is not a false positive, often using other tools, but will not seek to perform privilege escalation, lateral movement, post-exploitation, etc., if they validate, for example, a remote code execution vulnerability.
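The triage step described above can be sketched in a few lines of Python. This is only an illustration: the `Finding` structure, the sample hosts, and the severity labels are assumptions made for the example and do not correspond to any particular scanner's export format.

```python
from dataclasses import dataclass

# Severities that the text says are typically validated after a scan.
VALIDATE = {"critical", "high", "medium"}


@dataclass
class Finding:
    host: str
    title: str
    severity: str  # hypothetical labels: critical, high, medium, low, info


def needs_validation(findings):
    """Return the findings an assessor would confirm are not false positives."""
    return [f for f in findings if f.severity.lower() in VALIDATE]


# Made-up scan results for illustration only.
findings = [
    Finding("10.0.0.5", "Remote code execution in web server", "critical"),
    Finding("10.0.0.8", "Outdated TLS configuration", "medium"),
    Finding("10.0.0.9", "Server banner disclosure", "info"),
]

for f in needs_validation(findings):
    print(f"{f.host}: {f.title} [{f.severity}]")
```

Validation here stops at confirming that each selected finding is real; nothing in the sketch models privilege escalation or lateral movement, which matches the limits of a vulnerability assessment.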

Penetration tests, depending on their type, evaluate the security of different assets and the impact of the issues present in the environment. Penetration tests can include manual and automated tactics to assess an organization's security posture. They also often give a better idea of how secure a company's assets are from a testing perspective. A pentest is a simulated cyber attack to see if and how the network can be penetrated. Regardless of a company's size, industry, or network design, pentests should only be performed after some vulnerability assessments have been conducted successfully and with fixes. A business can do vulnerability assessments and pentests in the same year. They can complement each other. But they are very different sorts of security tests used in different situations, and one isn't "better" than the other.

Adapted from the original graphic found here.

Adapted from the original graphic found here.

An organization may benefit more from a vulnerability assessment over a penetration test if they want to receive a view of commonly known issues monthly or quarterly from a third-party vendor. However, an organization would benefit more from a penetration test if they are looking for an approach that utilizes manual and automated techniques to identify issues outside of what a vulnerability scanner would identify during a vulnerability assessment. A penetration test could also illustrate a real-life attack chain that an attacker could utilize to access an organization's environment. Individuals performing penetration tests have specialized expertise in network testing, wireless testing, social engineering, web applications, and other areas.

For organizations that receive penetration testing assessments on an annual or semi-annual basis, it is still crucial for those organizations to regularly evaluate their environment with internal vulnerability scans to identify new vulnerabilities as they are released to the public from vendors.
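One simple way to spot newly introduced issues between recurring scans is to compare the current results with the previous ones. The sketch below assumes each finding can be reduced to a `(host, identifier)` pair; the hosts and the `VULN-*` identifiers are placeholders, not real advisories.

```python
def new_findings(previous, current):
    """Return (host, vuln_id) pairs present in the current scan but not the last one."""
    return sorted(set(current) - set(previous))


# Placeholder scan results for two consecutive scans.
last_scan = {("10.0.0.5", "VULN-A"), ("10.0.0.8", "VULN-B")}
this_scan = {("10.0.0.5", "VULN-A"), ("10.0.0.8", "VULN-C")}

for host, vuln_id in new_findings(last_scan, this_scan):
    print(f"new since last scan: {vuln_id} on {host}")
```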

Vulnerability assessments and penetration tests are not the only types of security assessments that an organization can perform to protect its assets. Other types of assessments may also be necessary, depending on the type of the organization.

Security Audits

Vulnerability assessments are performed because an organization chooses to conduct them, and they can control how and when they're assessed. Security audits are different. Security audits are typically requirements from outside the organization, and they're typically mandated by government agencies or industry associations to assure that an organization is compliant with specific security regulations.

For example, all online and offline retailers, restaurants, and service providers who accept major credit cards (Visa, MasterCard, AMEX, etc.) must comply with the Payment Card Industry Data Security Standard (PCI DSS). PCI DSS is a standard maintained by the Payment Card Industry Security Standards Council, an organization run by credit card companies and financial service industry entities. A company that accepts credit and debit card payments may be audited for PCI DSS compliance, and noncompliance could result in fines and not being allowed to accept those payment methods anymore.

Regardless of which regulations an organization may be audited for, it's their responsibility to perform vulnerability assessments to assure that they're compliant before they're subject to a surprise security audit.

Bug Bounties

Bug bounty programs are implemented by all kinds of organizations. They invite members of the general public, with some restrictions (usually no automated scanning), to find security vulnerabilities in their applications. Bug bounty hunters can be paid anywhere from a few hundred dollars to hundreds of thousands of dollars for their findings, which is a small price for a company to pay to keep a critical remote code execution vulnerability from falling into the wrong hands.

Larger companies with large customer bases and high security maturity are appropriate for bug bounty programs. They need to have a team dedicated to triaging and analyzing bug reports and be in a situation where they can endure outsiders looking for vulnerabilities in their products.

Companies like Microsoft and Apple are ideal for having bug bounty programs because of their millions of customers and robust security maturity.

Red Team Assessment

Companies with larger budgets and more resources can hire their own dedicated red teams or use the services of third-party consulting firms to perform red team assessments. A red team consists of offensive security professionals who have considerable experience with penetration testing. A red team plays a vital role in an organization's security posture.

A red team assessment is a type of evasive black box pentest that simulates all kinds of cyber attacks from the perspective of an external threat actor. These assessments typically have an end goal (e.g., reaching a critical server or database). The assessors report only the vulnerabilities that led to the completion of the goal, rather than as many vulnerabilities as possible, as in a penetration test.

If a company has its own internal red team, its job is to perform more targeted penetration tests with an insider's knowledge of its network. A red team should constantly be engaged in red teaming campaigns. Campaigns could be based on new cyber exploits discovered through the actions of advanced persistent threat groups (APTs), for example. Other campaigns could target specific types of vulnerabilities to explore them in great detail once an organization has been made aware of them.

Ideally, if a company can afford it and has been building up its security maturity, it should conduct regular vulnerability assessments on its own, contract third parties to perform penetration tests or red team assessments, and, if appropriate, build an internal red team to perform grey and white box pentesting with more specific parameters and scopes.

Purple Team Assessment

A blue team consists of defensive security specialists. These are often people who work in a SOC (security operations center) or a CSIRT (computer security incident response team). Often, they have experience with digital forensics too. So if blue teams are defensive and red teams are offensive, red mixed with blue is purple.

What's a purple team?

Purple teams are formed when offensive and defensive security specialists work together with a common goal, to improve the security of their network. Red teams find security problems, and blue teams learn about those problems from their red teams and work to fix them. A purple team assessment is like a red team assessment, but the blue team is also involved at every step. The blue team may even play a role in designing campaigns. "We need to improve our PCI DSS compliance. So let's watch the red team pentest our point-of-sale systems and provide active input and feedback during their work."

Now that we've gone through the key assessment types that an organization can undergo, let's walk through vulnerability assessments in more depth to better understand key terms and a sample methodology.

A Vulnerability Assessment aims to identify and categorize risks for security weaknesses related to assets within an environment. It is important to note that there is little to no manual exploitation during a vulnerability assessment. A vulnerability assessment also provides remediation steps to fix the issues.

The purpose of a Vulnerability Assessment is to understand, identify, and categorize the risk for the more apparent issues present in an environment without actually exploiting them to gain further access. Depending on the scope of the assessment, some customers may ask us to validate as many vulnerabilities as possible by performing minimally invasive exploitation to confirm the scanner findings and rule out false positives. Other customers will ask for a report of all findings identified by the scanner. As with any assessment, it is essential to clarify the scope and intent of the vulnerability assessment before starting. Vulnerability management is vital to help organizations identify the weak points in their assets, understand the risk level, and calculate and prioritize remediation efforts.

It is also important to note that organizations should always test substantial patches before pushing them out into their environment to prevent disruptions.

Below is a sample vulnerability assessment methodology that most organizations could follow and find success with. Methodologies may vary slightly from organization to organization, but this chart covers the main steps, from identifying assets to creating a remediation plan.

Adapted from the original graphic found here.

Before we go any further, let's identify some key terms that any IT or Infosec professional should understand and be able to explain clearly.

Vulnerability

A Vulnerability is a weakness or bug in an organization's environment, including applications, networks, and infrastructure, that opens up the possibility of threats from external actors. Vulnerabilities can be registered through MITRE's Common Vulnerabilities and Exposures (CVE) database and receive a Common Vulnerability Scoring System (CVSS) score to determine severity. This scoring system is frequently used as a standard for companies and governments looking to calculate accurate and consistent severity scores for their systems' vulnerabilities. Scoring vulnerabilities in this way helps prioritize resources and determine how to respond to a given threat. Scores are calculated using metrics such as the type of attack vector (network, adjacent, local, physical), the attack complexity, privileges required, whether or not the attack requires user interaction, and the impact of successful exploitation on an organization's confidentiality, integrity, and availability of data. Scores can range from 0 to 10, depending on these metrics.

For example, SQL injection is considered a vulnerability since an attacker could leverage queries to extract data from an organization's database. This attack would have a higher CVSS score rating if it could be performed without authentication over the internet than if an attacker needed authenticated access to the internal network and separate authentication to the target application. These types of things must be considered for all vulnerabilities we encounter.
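To make the comparison concrete, here is a minimal sketch of the CVSS v3.1 base score calculation, limited to the scope-unchanged case. The metric weights and the rounding rule follow the published v3.1 specification; the mapping of the two SQL injection scenarios to metric values (network and no privileges versus adjacent and low privileges, with high impact across the board) is an illustrative assumption, not an official scoring of any specific vulnerability.

```python
import math

# CVSS v3.1 metric weights (scope unchanged only).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.20}  # attack vector
AC = {"L": 0.77, "H": 0.44}                        # attack complexity
PR = {"N": 0.85, "L": 0.62, "H": 0.27}             # privileges required
UI = {"N": 0.85, "R": 0.62}                        # user interaction
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}             # C/I/A impact


def roundup(value):
    """Round up to one decimal place using the integer method from the v3.1 spec."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(av, ac, pr, ui, c, i, a):
    """Compute the base score for a scope-unchanged vulnerability."""
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])
    impact = 6.42 * iss
    exploitability = 8.22 * AV[av] * AC[ac] * PR[pr] * UI[ui]
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


# Assumed scenario 1: unauthenticated SQL injection reachable over the internet.
unauthenticated = base_score("N", "L", "N", "N", "H", "H", "H")
# Assumed scenario 2: attacker needs internal network access and an account.
authenticated = base_score("A", "L", "L", "N", "H", "H", "H")
print(unauthenticated, authenticated)  # 9.8 8.0
```

Under these assumptions, the same flaw drops from Critical to High once network position and authentication are required, which is the effect described above.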

Threat

A Threat is a process that amplifies the potential of an adverse event, such as a threat actor exploiting a vulnerability. Some vulnerabilities raise more threat concerns over others due to the probability of the vulnerability being exploited. For example, the higher the reward of the outcome and ease of exploitation, the more likely the issue would be exploited by threat actors.

Exploit

An Exploit is any code or resources that can be used to take advantage of an asset's weakness. Many exploits are available through open-source platforms such as Exploit-DB or the Rapid7 Vulnerability and Exploit Database. We will often see exploit code hosted on sites such as GitHub and GitLab as well.

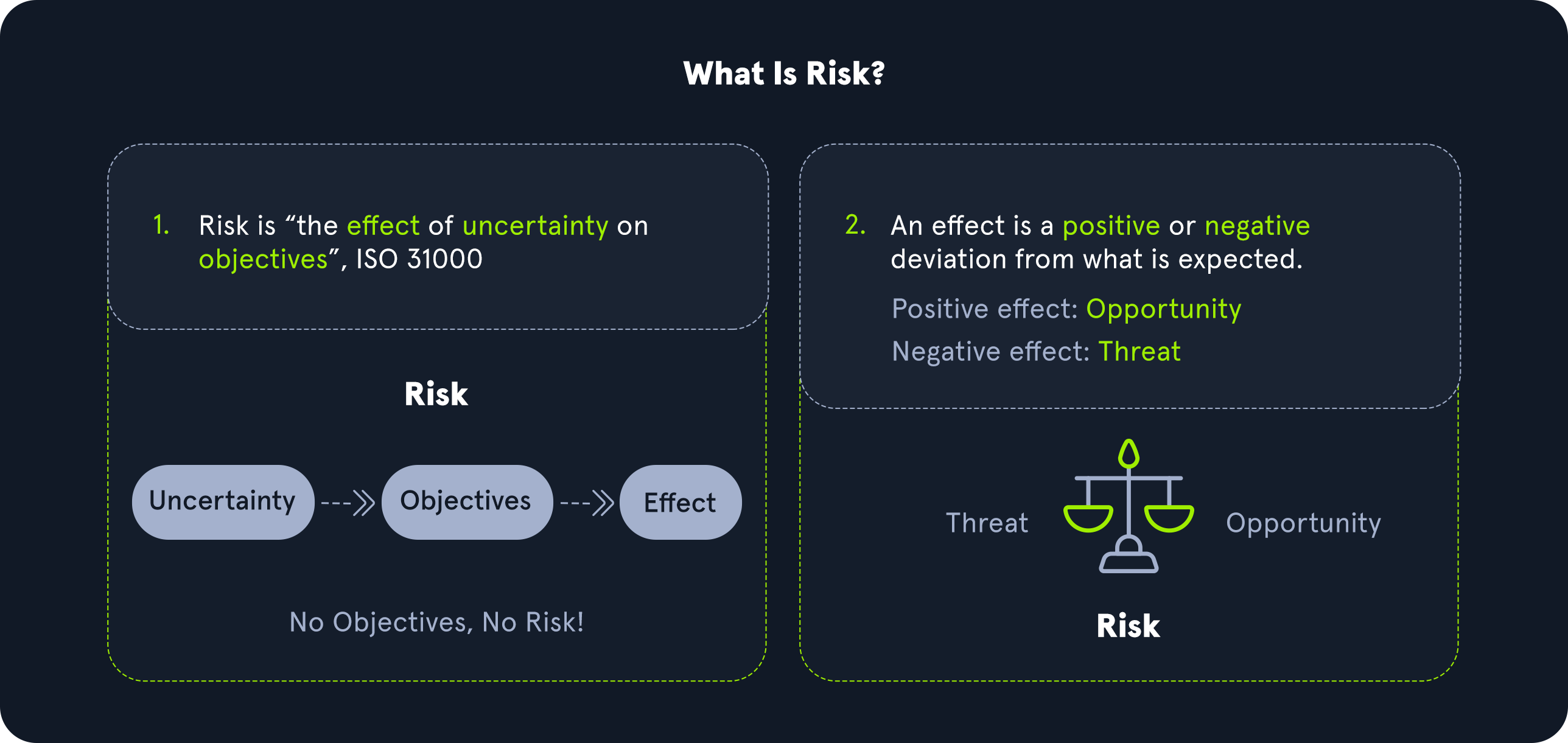

Risk

Risk is the possibility of assets or data being harmed or destroyed by threat actors.

To differentiate the three, we can think of it as follows:

- Risk: something bad that could happen
- Threat: something bad that is happening
- Vulnerabilities: weaknesses that could lead to a threat

Vulnerabilities, Threats, and Exploits all play a part in measuring the level of risk in weaknesses by determining the likelihood and impact. For example, vulnerabilities that have reliable exploit code and are likely to be used to gain access to an organization's network would significantly raise the risk of an issue due to the impact. If an attacker had access to the internal network, they could potentially view, edit, or delete sensitive documents crucial for business operations. We can use a qualitative risk matrix to measure risk based on likelihood and impact with the table shown below.

In this example, we can see that a vulnerability with a low likelihood of occurring and low impact would be the lowest risk level, while a vulnerability with a high likelihood of being exploited and the highest impact on an organization would represent the highest risk and would want to be prioritized for remediation.
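A qualitative matrix of this kind can be expressed as a small lookup. The sketch below assumes a three-level scale and multiplies likelihood by impact; both the scale and the thresholds are assumptions chosen for illustration and may differ from the matrix an organization actually uses.

```python
# Assumed three-level scale; real matrices often use five levels.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_level(likelihood, impact):
    """Map qualitative likelihood and impact to a qualitative risk level."""
    score = LEVELS[likelihood] * LEVELS[impact]  # possible values: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


print(risk_level("low", "low"))    # low
print(risk_level("high", "high"))  # high
```

Findings that land in the highest cell would be the first candidates for remediation.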

When an organization of any kind, in any industry, and of any size needs to plan their cybersecurity strategy, they should start by creating an inventory of their data assets. If you want to protect something, you must first know what you are protecting! Once assets have been inventoried, then you can start the process of asset management. This is a key concept in defensive security.

Asset Inventory

Asset inventory is a critical component of vulnerability management. An organization needs to understand what assets are in its network to provide the proper protection and set up appropriate defenses. The asset inventory should include information technology, operational technology, physical, software, mobile, and development assets. Organizations can utilize asset management tools to keep track of assets. The assets should have data classifications to ensure adequate security and access controls.

Application and System Inventory

An organization should create a thorough and complete inventory of data assets for proper asset management for defensive security. Data assets include:

- Data stored in the cloud. Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure are some of the most popular cloud providers, but there are many more. Sometimes corporate networks are "multi-cloud," meaning they have more than one cloud provider. A company's cloud provider will provide tools that can be used to inventory all of the data stored by that particular cloud provider.
- Data stored in Software-as-a-Service (SaaS) applications. This data is also "in the cloud" but might not all be within the scope of a corporate cloud provider account. These are often consumer services or the "business" version of those services. Think of online services such as Google Drive, Dropbox, Microsoft Teams, Apple iCloud, Adobe Creative Suite, Microsoft Office 365, Google Docs, and the list goes on.
- Networking devices, such as routers, firewalls, hubs, switches, dedicated intrusion detection and prevention systems (IDS/IPS), data loss prevention (DLP) systems, and so on.

All of these assets are very important. A threat actor or any other sort of risk to any of these assets can do significant damage to a company's information security and ability to operate day by day. An organization needs to take its time to assess everything and be careful not to miss a single data asset, or they won't be able to protect it.

Organizations frequently add or remove computers, data storage, cloud server capacity, or other data assets. Whenever data assets are added or removed, this must be thoroughly noted in the data asset inventory.
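As a rough illustration of keeping an inventory current, the sketch below records every addition and removal in a change log. The `Asset` fields, category names, and classification labels are hypothetical; dedicated asset management tools track far more detail.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Asset:
    name: str
    category: str        # hypothetical labels, e.g. "cloud", "saas", "network"
    classification: str  # hypothetical data classification, e.g. "confidential"


@dataclass
class AssetInventory:
    assets: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def add(self, asset):
        """Register an asset and note the change."""
        self.assets[asset.name] = asset
        self.log.append((date.today().isoformat(), "added", asset.name))

    def remove(self, name):
        """Retire an asset and note the change; raises KeyError if unknown."""
        del self.assets[name]
        self.log.append((date.today().isoformat(), "removed", name))


inventory = AssetInventory()
inventory.add(Asset("edge-firewall-01", "network", "internal"))
inventory.add(Asset("hr-file-share", "saas", "confidential"))
inventory.remove("edge-firewall-01")
print(sorted(inventory.assets))          # ['hr-file-share']
print([entry[1:] for entry in inventory.log])
```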

Next, we'll discuss some key standards that organizations may be subject to or choose to follow to standardize their approach to risk and vulnerability management.

Both penetration tests and vulnerability assessments should comply with specific standards to be accredited and accepted by governments and legal authorities. Such standards help ensure that the assessment is carried out thoroughly in a generally agreed-upon manner to increase the efficiency of these assessments and reduce the likelihood of an attack on the organization.

Each regulatory compliance body has its own information security standards that organizations must adhere to in order to maintain their accreditation. The big compliance players in information security are PCI, HIPAA, FISMA, and ISO 27001.

These accreditations are necessary because they certify that an organization has had a third-party vendor evaluate its environment. Organizations also rely on these accreditations for business operations, since some companies won't do business with organizations that lack specific accreditations.

Payment Card Industry Data Security Standard (PCI DSS)

The Payment Card Industry Data Security Standard (PCI DSS) is a commonly known standard in information security that implements requirements for organizations that handle credit cards. While not a government regulation, organizations that store, process, or transmit cardholder data must still implement PCI DSS guidelines. This would include banks or online stores that handle their own payment solutions (e.g., Amazon).

PCI DSS requirements include internal and external scanning of assets. For example, any credit card data that is being processed or transmitted must be handled in a Cardholder Data Environment (CDE). The CDE must be adequately segmented from an organization's regular environment to protect cardholder data from being compromised during an attack and to limit internal access to that data.

Health Insurance Portability and Accountability Act (HIPAA)

HIPAA is the Health Insurance Portability and Accountability Act, which is used to protect patients' data. HIPAA does not necessarily require vulnerability scans or assessments; however, a risk assessment and vulnerability identification are required to maintain HIPAA accreditation.

Federal Information Security Management Act (FISMA)

The Federal Information Security Management Act (FISMA) is a set of standards and guidelines used to safeguard government operations and information. The act requires an organization to provide documentation and proof of a vulnerability management program to maintain information technology systems' proper availability, confidentiality, and integrity.

ISO 27001

ISO 27001 is a standard used worldwide to manage information security. ISO 27001 requires organizations to perform quarterly external and internal scans.

Although compliance is essential, it should not drive a vulnerability management program. Vulnerability management should consider the uniqueness of an environment and the risk appetite of the organization.

The International Organization for Standardization (ISO) maintains technical standards for pretty much anything you can imagine. The ISO 27001 standard deals with information security. ISO 27001 compliance depends upon maintaining an effective Information Security Management System. To ensure compliance, organizations must perform penetration tests in a carefully designed way.

Penetration tests should not be performed without any rules or guidelines. There must always be a specifically defined scope for a pentest, and the owner of a network must have a signed legal contract with pentesters outlining what they're allowed to do and what they're not allowed to do. Pentesting should also be conducted in such a way that minimal harm is done to a company's computers and networks. Penetration testers should avoid making changes wherever possible (such as changing an account password) and limit the amount of data removed from a client's network. For example, instead of removing sensitive documents from a file share, a screenshot of the folder names should suffice to prove the risk.
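Because every action must stay within the agreed scope, testers often check targets against the scope before touching them. The following is a minimal sketch using Python's standard `ipaddress` module; the network range and the excluded host are invented for the example and would come from the signed scope document in practice.

```python
import ipaddress

# Hypothetical scope taken from an imaginary rules-of-engagement document.
IN_SCOPE = [ipaddress.ip_network("10.10.0.0/16")]
OUT_OF_SCOPE = [ipaddress.ip_address("10.10.5.20")]


def is_in_scope(target):
    """Return True only if the address is in an allowed range and not excluded."""
    ip = ipaddress.ip_address(target)
    if ip in OUT_OF_SCOPE:
        return False
    return any(ip in network for network in IN_SCOPE)


for target in ("10.10.1.15", "10.10.5.20", "192.168.1.10"):
    status = "in scope" if is_in_scope(target) else "OUT OF SCOPE"
    print(f"{target}: {status}")
```

Checking exclusions first means an explicitly excluded host is never tested, even when it sits inside an otherwise permitted range.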

In addition to scope and legalities, there are also various pentesting standards, depending on what kind of computer system is being assessed. Here are some of the more common standards you may use as a pentester.

PTES

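The Penetration Testing Execution Standard (PTES) can be applied to all types of penetration tests. It outlines the phases of a penetration test and how they should be conducted. These are the sections in the PTES:

- Pre-engagement Interactions
- Intelligence Gathering
- Threat Modeling
- Vulnerability Analysis
- Exploitation
- Post Exploitation
- Reporting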

OSSTMM

OSSTMM is the Open Source Security Testing Methodology Manual, another set of guidelines pentesters can use to ensure they're doing their jobs properly. It can be used alongside other pentest standards.

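OSSTMM is divided into five different channels for five different areas of pentesting:

- Human Security
- Physical Security
- Wireless Communications
- Telecommunications
- Data Networks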

NIST

The NIST (National Institute of Standards and Technology) is well known for its NIST Cybersecurity Framework, a system for designing incident response policies and procedures. NIST also has a Penetration Testing Framework. The phases of the NIST framework include:

- Planning
- Discovery
- Attack
- Reporting

OWASP

OWASP stands for the Open Web Application Security Project. They're typically the go-to organization for defining testing standards and classifying risks to web applications.

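OWASP maintains a few different standards and helpful guides for assessing various technologies:

- Web Security Testing Guide (WSTG)
- Mobile Security Testing Guide (MSTG)
- Firmware Security Testing Methodology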

There are various ways to score or calculate severity ratings of vulnerabilities. The Common Vulnerability Scoring System (CVSS) is an industry standard for performing these calculations. Many scanning tools will apply these scores to each finding as a part of the scan results, but it's important that we understand how these scores are derived in case we ever need to calculate one by hand or justify the score applied to a given vulnerability. The CVSS is often used together with Microsoft's DREAD model. DREAD is a risk assessment system developed by Microsoft to help IT security professionals evaluate the severity of security threats and vulnerabilities. It is used to perform a risk analysis by using a scale of 10 points to assess the severity of security threats and vulnerabilities. With this, we calculate the risk of a threat or vulnerability based on five main factors:

- Damage Potential
- Reproducibility
- Exploitability
- Affected Users
- Discoverability

The model is essential to Microsoft's security strategy and is used to monitor, assess and respond to security threats and vulnerabilities in Microsoft products. It also serves as a reference for IT security professionals and managers to perform their risk assessment and prioritization of security threats and vulnerabilities.
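A DREAD rating is commonly summarized as the average of the five factor ratings (damage potential, reproducibility, exploitability, affected users, and discoverability). The sketch below follows that convention on a 0 to 10 scale; the averaging approach and the sample ratings are assumptions for illustration, since teams apply the model in slightly different ways.

```python
def dread_score(damage, reproducibility, exploitability, affected_users, discoverability):
    """Average the five DREAD factor ratings, each expected to be between 0 and 10."""
    ratings = [damage, reproducibility, exploitability, affected_users, discoverability]
    for rating in ratings:
        if not 0 <= rating <= 10:
            raise ValueError("each DREAD rating must be between 0 and 10")
    return sum(ratings) / len(ratings)


# Made-up ratings for a hypothetical, easily reproduced remote flaw.
print(dread_score(8, 10, 7, 10, 10))  # 9.0
```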

The CVSS system helps categorize the risk associated with an issue and allows organizations to prioritize issues based on the rating. The CVSS scoring consists of the exploitability and impact of an issue. The exploitability measurements consist of access vector, access complexity, and authentication. The impact metrics consist of the CIA triad, including confidentiality, integrity, and availability.

Adapted from the original graphic found here.

The CVSS base metric group represents the vulnerability characteristics and consists of exploitability metrics and impact metrics.

Exploitability Metrics

The Exploitability metrics are a way to evaluate the technical means needed to exploit the issue using the metrics below:

- Attack Vector
- Attack Complexity
- Privileges Required
- User Interaction

Impact Metrics

The Impact metrics represent the repercussions of successfully exploiting an issue and what is impacted in an environment, and it is based on the CIA triad. The CIA triad is an acronym for Confidentiality, Integrity, and Availability.

Confidentiality Impact relates to securing information and ensuring only authorized individuals have access. For example, a high severity value would be in the case of an attacker stealing passwords or encryption keys. A low severity value would relate to an attacker taking information that may not be a vital asset to an organization.

Integrity Impact relates to information not being changed or tampered with to maintain accuracy. For example, a high severity would be if an attacker modified crucial business files in an organization's environment. A low severity value would be if an attacker could not specifically control the number of changed or modified files.

Availability Impact relates to having information readily attainable for business requirements. For example, a high value would be if an attacker caused an environment to be completely unavailable for business. A low value would be if an attacker could not entirely deny access to business assets and users could still access some organization assets.

The Temporal Metric Group details the availability of exploits or patches regarding the issue.

Exploit Code Maturity

The Exploit Code Maturity metric represents the probability of an issue being exploited based on ease of exploitation techniques. There are various metric values associated with this metric, including Not Defined, High, Functional, Proof-of-Concept, and Unproven.

A 'Not Defined' value relates to skipping this particular metric. A 'High' value represents an exploit that works consistently for the issue and is easily identifiable with automated tools. A 'Functional' value indicates there is exploit code available to the public. A 'Proof-of-Concept' value indicates that PoC exploit code is available but would require changes for an attacker to exploit the issue successfully. An 'Unproven' value indicates that no exploit code is available or that an exploit is only theoretical.

Remediation Level

The Remediation level is used to identify the prioritization of a vulnerability. The metric values associated with this metric include Not Defined, Unavailable, Workaround, Temporary Fix, and Official Fix.

A 'Not Defined' value relates to skipping this particular metric. An 'Unavailable' value indicates there is no patch available for the vulnerability. A 'Workaround' value indicates an unofficial solution is available until the vendor releases an official patch. A 'Temporary Fix' value means the vendor has provided an official but temporary solution and has not yet released a patch for the issue. An 'Official Fix' value indicates the vendor has publicly released an official patch for the issue.

Report Confidence

Report Confidence represents the validation of the vulnerability and how accurate the technical details of the issue are. The metric values associated with this metric include Not Defined, Confirmed, Reasonable, and Unknown.

A 'Not Defined' value relates to skipping this particular metric. A 'Confirmed' value indicates there are various sources with detailed information confirming the vulnerability. A 'Reasonable' value indicates sources have published information about the vulnerability, but there is no complete confidence that someone would achieve the same result because details needed to reproduce the exploit are missing. An 'Unknown' value indicates that reports suggest a vulnerability is present, but its cause is unknown or the reports disagree.
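The three temporal metrics above adjust a base score downward by simple multiplication. The sketch below assumes the weights and the rounding helper published in the CVSS v3.1 specification. The function and dictionary names are my own, and the metric values passed in the example are illustrative choices, not an official assessment of any CVE.

```python
import math

# Weights as given in the CVSS v3.1 specification (X = Not Defined).
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}


def roundup(value: float) -> float:
    """Round up to one decimal place, avoiding floating-point drift."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Temporal score = Roundup(base * E * RL * RC)."""
    return roundup(
        base
        * EXPLOIT_CODE_MATURITY[e]
        * REMEDIATION_LEVEL[rl]
        * REPORT_CONFIDENCE[rc]
    )


if __name__ == "__main__":
    # Illustrative inputs: functional exploit, official fix, confirmed report.
    print(temporal_score(8.8, e="F", rl="O", rc="C"))
```

Because every weight is at most 1.0, the temporal score can never exceed the base score. Leaving all three metrics as Not Defined returns the base score unchanged.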

The Environmental metric group represents the significance of the vulnerability to a specific organization, taking into account the CIA triad.

Modified Base Metrics

The Modified Base metrics let an affected organization adjust the base metrics to reflect its own environment, for example when it judges that a loss of Confidentiality, Integrity, or Availability poses a more significant risk to it. The requirement values associated with this adjustment are Not Defined, High, Medium, and Low.

A 'Not Defined' value would indicate skipping this metric. A 'High' value would mean the loss of one of the elements of the CIA triad would have catastrophic effects on the overall organization and customers. A 'Medium' value would indicate the loss of one of the elements of the CIA triad would have serious effects on the overall organization and customers. A 'Low' value would mean the loss of one of the elements of the CIA triad would have limited effects on the overall organization and customers.

The calculation of a CVSS v3.1 score takes into account all the metrics discussed in this section. The National Vulnerability Database has a calculator available to the public here.

CVSS Calculation Example

For example, for the Windows Print Spooler Remote Code Execution Vulnerability, the CVSS base score is 8.8. You can reference the value of each metric here.
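To make the 8.8 figure concrete, the following Python sketch reimplements the CVSS v3.1 base score equations from the public specification. It is an illustration, not a replacement for the NVD calculator. The vector string in the example, `AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H`, is assumed to match the published vector for this vulnerability, and the function and table names are my own.

```python
import math

# Metric weights from the CVSS v3.1 specification.
ATTACK_VECTOR = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
ATTACK_COMPLEXITY = {"L": 0.77, "H": 0.44}
USER_INTERACTION = {"N": 0.85, "R": 0.62}
CIA_IMPACT = {"H": 0.56, "L": 0.22, "N": 0.0}
# Privileges Required is weighted differently when Scope is Changed.
PRIVILEGES_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PRIVILEGES_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value: float) -> float:
    """Round up to one decimal place, avoiding floating-point drift."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the CVSS v3.1 base score for a vector string."""
    # "CVSS:3.1/AV:N/..." -> {"CVSS": "3.1", "AV": "N", ...}
    metrics = dict(part.split(":", 1) for part in vector.split("/"))
    scope_changed = metrics["S"] == "C"

    privileges = PRIVILEGES_CHANGED if scope_changed else PRIVILEGES_UNCHANGED
    exploitability = (
        8.22
        * ATTACK_VECTOR[metrics["AV"]]
        * ATTACK_COMPLEXITY[metrics["AC"]]
        * privileges[metrics["PR"]]
        * USER_INTERACTION[metrics["UI"]]
    )

    # Impact sub-score (ISS) combines the three CIA impacts.
    iss = 1 - (
        (1 - CIA_IMPACT[metrics["C"]])
        * (1 - CIA_IMPACT[metrics["I"]])
        * (1 - CIA_IMPACT[metrics["A"]])
    )
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if scope_changed:
        total *= 1.08
    return roundup(min(total, 10))


if __name__ == "__main__":
    print(base_score("CVSS:3.1/AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H"))
```

With the assumed vector, the impact sub-score works out to roughly 5.87 and the exploitability sub-score to roughly 2.84. Their sum of about 8.71 rounds up to 8.8.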

Next, we'll discuss how vulnerabilities are classified in a standard way that scanning tools can use to include an external reference to the particular vulnerability.

Open Vulnerability Assessment Language (OVAL) is a publicly available international information security standard used to evaluate and detail a system's current state and issues. OVAL is co-supported by the Office of Cybersecurity and Communications of the U.S. Department of Homeland Security. OVAL provides a language for encoding system attributes, along with various content repositories shared within the security community. The OVAL repository has over 7,000 definitions for public use. OVAL is also used by the U.S. National Institute of Standards and Technology's (NIST) Security Content Automation Protocol (SCAP), which brings together community ideas for automating vulnerability management, measurement, and policy compliance checks.

OVAL Process

Adapted from the original graphic found here.

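The goal of the OVAL language is to give the assessment process a three-step structure: representing a system's configuration information for testing, analyzing the system for the presence of a specified machine state, and reporting the results of the assessment.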

The information can be described in various types of states, including: Vulnerable, Non-compliant, Installed Asset, and Patched.

OVAL Definitions

The OVAL definitions are recorded in an XML format and are used to discover software vulnerabilities, misconfigurations, installed programs, and additional system information without needing to exploit a system. Because issues can be identified without exploiting them directly, an organization can work out which systems in a network need to be patched.

The four main classes of OVAL definitions consist of:

OVAL Vulnerability Definitions: Identifies system vulnerabilities

OVAL Compliance Definitions: Identifies if current system configurations meet system policy requirements

OVAL Inventory Definitions: Evaluates a system to see if a specific piece of software is present

OVAL Patch Definitions: Identifies if a system has the appropriate patch

Additionally, each OVAL ID follows the format "oval:Organization Domain Name:ID Type:ID Value". The ID Type can fall into various categories, including definition (def), object (obj), state (ste), and variable (var). An example of a unique identifier would be oval:org.mitre.oval:obj:1116.
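The ID format described above is regular enough to parse mechanically. Here is a minimal Python sketch, assuming only the four ID types named in the text and a positive integer ID value. The `OvalId` type, the function name, and the exact character set allowed in the organization domain are my own assumptions rather than part of an official OVAL library.

```python
import re
from typing import NamedTuple

# Only the ID types named in the text are handled in this sketch.
ID_TYPES = {"def": "definition", "obj": "object", "ste": "state", "var": "variable"}

OVAL_ID_PATTERN = re.compile(
    r"oval:(?P<namespace>[A-Za-z0-9_.\-]+):(?P<id_type>def|obj|ste|var):(?P<value>[1-9][0-9]*)"
)


class OvalId(NamedTuple):
    namespace: str  # organization domain name, e.g. org.mitre.oval
    id_type: str    # expanded type name, e.g. "object"
    value: int      # numeric ID value


def parse_oval_id(text: str) -> OvalId:
    """Split an OVAL ID into its namespace, type, and numeric value."""
    match = OVAL_ID_PATTERN.fullmatch(text)
    if match is None:
        raise ValueError(f"not a recognized OVAL ID: {text!r}")
    return OvalId(
        namespace=match["namespace"],
        id_type=ID_TYPES[match["id_type"]],
        value=int(match["value"]),
    )


if __name__ == "__main__":
    print(parse_oval_id("oval:org.mitre.oval:obj:1116"))
```

A check like this could be useful when validating references inside custom definition files before handing them to a scanner.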

Scanners such as Nessus have the ability to use OVAL to configure security compliance scanning templates.

Common Vulnerabilities and Exposures (CVE) is a publicly available catalog of security issues sponsored by the United States Department of Homeland Security (DHS). Each security issue has a unique CVE ID number assigned by a CVE Numbering Authority (CNA). The unique CVE ID gives a vulnerability or exposure a standard identifier from the point a researcher identifies it. A CVE consists of critical information regarding a vulnerability or exposure, including a description and references about the issue. The information in a CVE allows an organization's IT team to understand how detrimental a problem could be to their environment.
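CVE IDs follow a fixed syntax: the prefix `CVE`, a four-digit year, and a sequence number of four or more digits. The small Python sketch below validates and splits an ID on that basis. The function name is hypothetical, and the check is purely syntactic: it does not confirm that the ID exists in the CVE catalog.

```python
import re
from typing import Tuple

# CVE-<4-digit year>-<sequence of 4 or more digits>
CVE_ID_PATTERN = re.compile(r"CVE-(?P<year>[0-9]{4})-(?P<sequence>[0-9]{4,})")


def parse_cve_id(text: str) -> Tuple[int, int]:
    """Return (year, sequence number) for a syntactically valid CVE ID."""
    match = CVE_ID_PATTERN.fullmatch(text)
    if match is None:
        raise ValueError(f"not a valid CVE ID: {text!r}")
    return int(match["year"]), int(match["sequence"])


if __name__ == "__main__":
    print(parse_cve_id("CVE-2021-34527"))
    print(parse_cve_id("CVE-2020-5902"))
```

Tools that ingest scanner output often normalize IDs this way before looking them up in a database such as the NVD.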

The following chart explains how a CVE ID may be assigned to a vulnerability. Any vulnerabilities assigned a CVE must be independently fixable, affect just one codebase, and be acknowledged and documented by the relevant vendor.

Adapted from the original graphic here.

Stage 1: Identify if CVE is Required and Relevant

Identify if the issue found is a vulnerability. According to the CVE Team, "A vulnerability in the context of the CVE Program is indicated by code that can be exploited, resulting in a negative impact to confidentiality, integrity, OR availability, and that requires a coding change, specification change, or specification deprecation to mitigate or address." Additionally, researchers should verify that a CVE ID for the issue does not already exist in the CVE database.

Stage 2: Reach Out to Affected Product Vendor

A researcher should ensure they have made a good faith effort to contact a vendor directly. Researchers can reference CVE's Documents on Disclosure Practices for additional information.

Stage 3: Identify if Request Should Be For Vendor CNA or Third Party CNA

If a company is a participating CNA, it can assign a CVE ID for one of its own products. If the issue affects a product from a participating CNA, researchers can contact the appropriate CNA organization here. If the vendor is not a participating CNA, a researcher should attempt to reach out to the vendor's third-party coordinator.

Stage 4: Requesting CVE ID Through CVE Web Form

The CVE Team has a form that can be filled out online here if the methods above do not work for CVE requests.

Stage 5: Confirmation of CVE Form

Upon submitting the CVE Web Form mentioned in Stage 4, an individual will receive a confirmation email. The CVE team will contact the requestor if any additional information is required.

Stage 6: Receipt of CVE ID

Upon approval, the CVE Team will notify the requestor of a CVE ID if the affected product's vulnerability is confirmed. Please note that the CVE ID is not public yet at this stage.

Stage 7: Public Disclosure of CVE ID

CVE IDs can be announced to the public as soon as appropriate vendors and parties are aware of the issue to prevent duplication of CVE IDs. This stage ensures that all associated parties are aware of the problem before being publicly disclosed.

Stage 8: Announcing the CVE

The CVE Team asks researchers who are sharing multiple CVEs to ensure each CVE clearly identifies a distinct vulnerability. Additional information can be found here.

Stage 9: Providing Information to The CVE Team

At this stage, the CVE Team asks that the researcher help provide additional information to be used in the official CVE listing on the website. The U.S. National Vulnerability Database (NVD) maintains this information online in their database as well.

Security researchers and consultants constantly reference the CVE database since it consists of thousands of vulnerabilities that could be leveraged for exploitation. In addition, while digging into a specific piece of software or program, individuals may come across an issue they have never seen in the wild or that has never been disclosed.

Responsible disclosure is essential in the security community because it allows an organization or researcher to work directly with a vendor, providing them with the issue details first so that a patch is available before the vulnerability is announced to the world. If an issue is not responsibly disclosed to a vendor, real threat actors may be able to leverage it for criminal use. An undisclosed, unpatched issue of this kind is referred to as a zero day or 0-day.

CVE-2020-5902

CVE-2020-5902 is an unauthenticated, remote code execution vulnerability in the BIG-IP Traffic Management User Interface (TMUI). The issue is exploitable when TMUI is available through the BIG-IP management port and leads to a complete system takeover since an attacker could execute code, edit files, and enable or disable services on the remote host.

CVE-2021-34527

CVE-2021-34527, also known as PrintNightmare, is a remote code execution vulnerability within the Windows Print Spooler service. The service can be abused because it improperly handles privileged file operations. The issue requires a user to be authenticated but allows complete takeover of a system through remote or local code execution. It is extremely dangerous because it affects both servers (including domain controllers) and workstations, which can allow an attacker to fully control a domain.

Now that we've defined key terms, discussed assessment types, vulnerability scoring, and disclosure, let's move on to getting familiar with two popular vulnerability scanning tools: Nessus and OpenVAS.

As discussed earlier, vulnerability scanning is performed to identify potential vulnerabilities in network devices such as routers, firewalls, and switches, as well as servers, workstations, and applications. Scanning is automated and focuses on finding potential/known vulnerabilities on the network or at the application level. Vulnerability scanners typically do not exploit vulnerabilities (with some exceptions). Instead, a human needs to manually validate scan results to determine whether a particular scan returned real issues that need to be fixed or false positives that can be ignored and excluded from future scans against the same target.

Vulnerability scanning is often part of a standard penetration test, but the two are not the same. A vulnerability scan can help gain additional coverage during a penetration test or speed up the project's testing under time constraints. An actual penetration test includes much more than just a scan.

The type of scans run varies from one tool to another, but most tools run a combination of dynamic and static tests, depending on the target and the vulnerability. A static test flags a vulnerability when the identified version of a particular asset has a public CVE. However, this is not always accurate, as a patch may have been applied or the target may not actually be vulnerable to that CVE. On the other hand, a dynamic test tries specific (usually benign) payloads, such as weak credentials, SQL injection, or command injection, on the target (e.g., a web application). If any payload returns a hit, then there's a good chance that the target is vulnerable.
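The static approach can be sketched in a few lines of Python: compare the version a service reports against known vulnerable ranges. Everything in the example data is hypothetical, including the product name `exampled`, the placeholder ID `CVE-2099-0001`, and the version range. Real scanners rely on far richer fingerprinting and advisory feeds.

```python
from typing import List, NamedTuple, Tuple

Version = Tuple[int, ...]


class Advisory(NamedTuple):
    """Hypothetical advisory record with a vulnerable version range."""
    cve_id: str
    product: str
    first_vulnerable: Version  # inclusive lower bound
    first_fixed: Version       # exclusive upper bound


def parse_version(text: str) -> Version:
    """Turn a dotted version such as '2.4.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def static_check(product: str, reported_version: str,
                 advisories: List[Advisory]) -> List[str]:
    """Return CVE IDs whose vulnerable range contains the reported version."""
    detected = parse_version(reported_version)
    return [
        adv.cve_id
        for adv in advisories
        if adv.product == product
        and adv.first_vulnerable <= detected < adv.first_fixed
    ]


# Invented data for illustration only.
ADVISORIES = [Advisory("CVE-2099-0001", "exampled", (2, 4, 0), (2, 4, 10))]

if __name__ == "__main__":
    print(static_check("exampled", "2.4.1", ADVISORIES))   # inside the range
    print(static_check("exampled", "2.4.10", ADVISORIES))  # fixed release
```

Note the limitation the text describes: a host running 2.4.1 with a backported patch would still be flagged, because the check only sees the reported version string. This is one reason static findings need manual validation.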

Organizations should run both unauthenticated and authenticated scans on a continuous schedule to ensure that assets are patched as new vulnerabilities are discovered and that any new assets added to the network do not have missing patches or other configuration issues. Vulnerability scanning should feed into an organization's patch management program.

Nessus, Nexpose, and Qualys are well-known vulnerability scanning platforms that also provide free community editions. There are also open-source alternatives such as OpenVAS.

Nessus Essentials by Tenable is the free version of the official Nessus Vulnerability Scanner. Individuals can access Nessus Essentials to get started understanding Tenable's vulnerability scanner. The caveat is that it can only be used for up to 16 hosts. The features in the free version are limited but are perfect for someone looking to get started with Nessus. The free scanner will attempt to identify vulnerabilities in an environment.

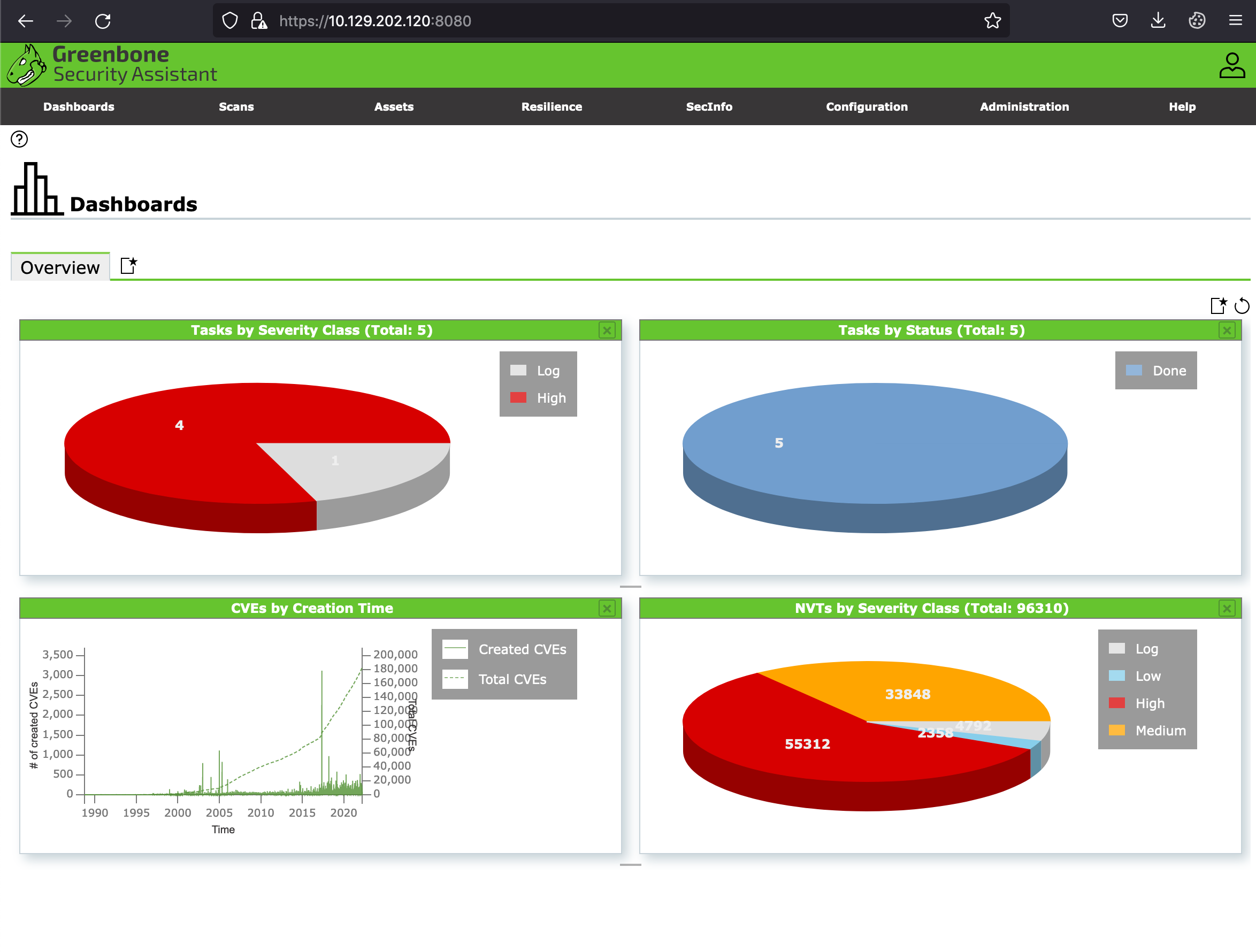

OpenVAS by Greenbone Networks is a publicly available open-source vulnerability scanner. OpenVAS can perform network scans, including authenticated and unauthenticated testing.