Before beginning any penetration testing engagement, it is essential to set up a reliable and efficient working environment. This involves organizing tools, configuring systems, and ensuring that all necessary resources are ready for use. By establishing a well-structured testing infrastructure early on, we can reduce downtime, minimize errors, and streamline the assessment process. In this module, we will explore the foundational technologies and configurations that support this goal, focusing on virtualization and setting up the proper environment for our testing activities.

Assume that our company was commissioned by a new customer (Inlanefreight) to perform an external and internal penetration test. As already mentioned, proper Operating System preparation is required before conducting any penetration test. Our customer provides us with internal systems that we should prepare before the engagement so that the penetration testing activities commence without delays. For this, we have to prepare the necessary operating systems accordingly and efficiently.

Every penetration test is different in terms of scope, expected results, and environment, depending on the customer's service line and infrastructure. Apart from the different penetration testing stages we usually go through, our activities can vary depending on the type of penetration test, which can either extend or limit our working environment and capabilities.

For example, if we are performing an internal penetration test, in most cases we are provided with an internal host from which we can work. If this host has internet access (which is usually the case), we need a corresponding Virtual Private Server (VPS) with our tools so that we can quickly access and download the related penetration testing resources.

Testing may be performed remotely or on-site, depending on the client's preference. If remote, we will typically ship them a device with our penetration testing distro of choice pre-installed, or provide them with a custom VM that will call back to our infrastructure via OpenVPN. The client will elect to either host an image (that we must log into and customize a bit on day one) and give us SSH access (via IP whitelisting), or provide us with VPN access directly into their network. Some clients will prefer not to host any image and provide VPN access, in which case we are free to test from our own local Linux and Windows VMs.

When traveling on-site to a client, it is essential to have both a customized and fully up-to-date Linux VM and Windows VM. Certain tools work best (or only) on Linux, and having a Windows VM makes specific tasks (such as enumerating Active Directory) much easier and more efficient. Regardless of the setup chosen, we must explain the pros and cons of each option to our clients and help them choose the best possible solution based on their network and requirements.

This is yet another area of penetration testing in which we must be versatile and adaptable as subject matter experts. We must make sure we are fully prepared on day 1 with the proper tools to provide the client with the best possible value and an in-depth assessment. Every environment is different, and we never know what we will encounter once we begin enumerating the network and uncovering issues. We will have to compile/install tools or download specific scripts to our attack VM during almost every assessment we perform. Having our tools set up in the best way possible will ensure that we don't waste time in the initial days of the assessment. Ideally, we should only have to make changes to our assessment VMs for specific scenarios we encounter during the assessment.

Over time, we all gather different experiences and collections of tools that we are most familiar with. Being structured is of paramount importance, as it increases our efficiency in penetration testing. The need to search for individual resources and their dependencies before the engagement even starts can be removed entirely by having access to a prebaked, organized, and structured environment. Doing so requires preparation and knowledge of different operating systems, which will develop with time.

Efficiency is what many people want and expect. However, many people today rely on so many tools that their system becomes slow and no longer works properly. This is not surprising given the large number of applications and solutions offered today. Beginners in particular are overwhelmed when every source of information offers 50 different opinions. These opinions are all relevant and depend on the individual case, which is not a bad thing.

However, beginners and even experienced people often look for other solutions when the scope of their work or their responsibilities change. Then there is another difficult aspect: migrating from the old setup to the new one.

This often requires a great deal of time and effort and still does not guarantee that the investment will pay off. Therefore, in this module we want to build the essential setup: a working environment that we know inside out and can configure and adapt on our own.

As we have already seen in the Learning Process module, organization plays a significant role in our penetration tests, regardless of the type of penetration test. A working environment that we can navigate almost blindly saves a tremendous amount of time that we would otherwise spend researching resources we are already familiar with and have invested time in learning. Each of these resources can be found within a few minutes. However, once we have an extensive list of resources required for each assessment and factor in their installation, this adds up to a few hours of pure preparation.

Corporate environments usually consist of heterogeneous networks (hosts/servers having different Operating Systems). Therefore, it makes sense to organize hosts and servers based on their OS. If we organize our structure according to penetration testing stages and the targets’ Operating System, then a sample folder structure could look as follows.

Cry0l1t3@htb[/htb]$ tree ..
└── Penetration-Testing
    │
    ├── Pre-Engagement
    │   └── ...
    ├── Linux
    │   ├── Information-Gathering
    │   │   └── ...
    │   ├── Vulnerability-Assessment
    │   │   └── ...
    │   ├── Exploitation
    │   │   └── ...
    │   ├── Post-Exploitation
    │   │   └── ...
    │   └── Lateral-Movement
    │       └── ...
    ├── Windows
    │   ├── Information-Gathering
    │   │   └── ...
    │   ├── Vulnerability-Assessment
    │   │   └── ...
    │   ├── Exploitation
    │   │   └── ...
    │   ├── Post-Exploitation
    │   │   └── ...
    │   └── Lateral-Movement
    │       └── ...
    ├── Reporting
    │   └── ...
    └── Results
        └── ...

If we are specialized in specific penetration testing fields, we can, of course, reorganize the structure according to these fields. We are all free to develop a system with which we are familiar, and in fact, it is recommended that we do so. Everyone works differently and has their strengths and weaknesses. If we work in a team, we should develop a structure that each team member is familiar with. Take this example as a starting point for creating your system.

Cry0l1t3@htb[/htb]$ tree ..
└── Penetration-Testing
    │
    ├── Pre-Engagement
    │   └── ...
    ├── Network-Pentesting
    │   ├── Linux
    │   │   ├── Information-Gathering
    │   │   │   └── ...
    │   │   ├── Vulnerability-Assessment
    │   │   │   └── ...
    │   │   └── ...
    │   │       └── ...
    │   ├── Windows
    │   │   ├── Information-Gathering
    │   │   │   └── ...
    │   │   └── ...
    │   └── ...
    ├── WebApp-Pentesting
    │   └── ...
    ├── Social-Engineering
    │   └── ...
    ├── .......
    │   └── ...
    ├── Reporting
    │   └── ...
    └── Results
        └── ...

Proper organization helps us in both keeping track of everything and finding errors in our processes. During our studies here, we will come across many different fields that we can use to expand and enhance our understanding of the cybersecurity domain. Not only can we save the cheatsheets or scripts provided within Modules in Academy, but we can also keep notes regarding all phases of a penetration test we will come across in HTB Academy to ensure that no critical steps are missed in future engagements. We recommend starting with small structures, especially when entering the penetration testing field. Organizing based on the Operating System is, therefore, more suitable for newcomers.
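As a starting point, the first (OS-based) structure shown above can be created with a single command. The following is a minimal Bash sketch that assumes the folder names from that tree. The function name make_pt_tree and the default location ~/Penetration-Testing are hypothetical choices and are not part of any tool.

```bash
# Sketch: create the OS-based folder structure in one go.
# Brace expansion produces every {Linux,Windows} x stage combination.
make_pt_tree() {
    local root="${1:-$HOME/Penetration-Testing}"   # hypothetical default location
    mkdir -p "$root"/{Pre-Engagement,Reporting,Results} \
             "$root"/{Linux,Windows}/{Information-Gathering,Vulnerability-Assessment,Exploitation,Post-Exploitation,Lateral-Movement}
}
```

Running `make_pt_tree`, or `make_pt_tree /path/to/folder`, creates all 15 folders. Because `mkdir -p` does not complain about folders that already exist, it is safe to run the function again.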

While organizing things for ourselves or an entire team, we should make sure that we all work according to a specific procedure. Everyone should know where they fit in, and where every other member fits in, throughout the entire penetration testing process. There should also be a common understanding of the activities of each member. Otherwise, things may end up in the wrong subdirectory, or evidence necessary for reporting could be lost or corrupted.

Numerous browser add-ons exist that can significantly enhance both our penetration testing activities and our efficiency. Having to reinstall them over and over again takes time and slows us down unnecessarily. Thankfully, Firefox offers add-on and bookmark synchronization once we create and use a Firefox account. All add-ons installed using this account are automatically installed and synchronized when we log in again. The same applies to any saved bookmarks. Therefore, logging in with a Firefox account is enough to transfer everything from a prefabricated environment to a new one.

We should be cautious not to store any resources containing potentially sensitive information or private resources. We should always keep in mind that third parties could view these stored resources. Therefore, customer-related bookmarks should never be saved. A list of bookmarks should always be created with a single principle:

This list will be seen by third parties sooner or later.

For this reason, we should create an account for penetration testing purposes only. If our bookmark list must be edited and extended, the safest route is to store the list locally and import it into the pentesting account. Once we have done this, we should switch back to our private (non-pentesting) account.

Another essential component for us is a password manager. Password managers can prove helpful not only for personal purposes but also for penetration tests. One of the most common vulnerabilities or attack methods within a network is "password reuse". In this attack, we take usernames and passwords that we have found, cracked, or decrypted and try them against multiple systems and services within the company network. It is still quite common to come across credentials that grant access to several services or servers, which makes our work easier and gives us more opportunities for attack. There are three main problems with passwords:

1. Complexity

The first problem with passwords is their complexity. Creating a complex password is already a challenge for standard users, because passwords are often based on content that users know and can remember. NordPass has published a list of the most commonly used passwords. It shows that these passwords can be guessed within seconds without any special preparation, such as password mutation.

2. Re-usage

Even once a user has created and memorized a complex password, two problems remain. Remembering a single complex, hard-to-guess password is still within the realm of possibility for a standard user. The second problem is that this one password is then used for all services, which allows us to move across several components of the company's infrastructure. To prevent this, the user would have to create and remember a separate complex password for every service they use.

3. Remembering

This brings us to the third problem with passwords. If a standard user uses several dozen services, human nature leads them to become lazy. Very few will actually make the effort to remember all of their passwords. If a user creates many passwords without a secure way of storing them, the risk of forgetting them or mixing them up is high.

Password managers solve all of the problems mentioned above, not only for standard users but also for penetration testers. We work with dozens, if not hundreds, of different services and servers, and each of them needs a new, strong, and complex password. Providers include, but are not limited to, the following:

| 1Password | LastPass | Keeper | Bitwarden | Proton Pass |
|---|---|---|---|---|

Another advantage is that we only have to remember one password to access all of our other passwords. One of the most recommended password managers is Proton Pass, which offers a free plan and a Plus plan with integrated 2FA, a secure vault, and dark web monitoring.
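Password managers usually generate strong passwords for us. Sometimes we need one directly in a terminal, for example on a freshly installed VM before our password manager is set up. For that case, a minimal Bash sketch could look like the one below. The function name genpass, the default length of 24, and the allowed character set are our own assumptions.

```bash
# Sketch: print a random password of the given length (default: 24 characters).
genpass() {
    local length="${1:-24}"
    # Keep only the allowed characters from the random byte stream and stop after
    # $length of them. LC_ALL=C makes tr treat the input as raw bytes.
    LC_ALL=C tr -dc 'A-Za-z0-9!@#%^&*_+=-' < /dev/urandom | head -c "$length"
    echo
}
```

For example, `genpass 32` prints a 32-character password. The result should still be stored in the password manager right away.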

We should always update the components we have organized before starting a new penetration test. This applies both to the operating system we use and to all the GitHub collections we gather and use over time. It is highly recommended to record all the resources and their sources in a file so that we can more easily automate them later. Any automation scripts can also be stored in Proton, on GitHub, or on our own server with self-hosted applications, so that we can download them directly when needed.
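One way to put this into practice is to keep a plain-text list of the Git repositories we rely on and let a small script clone or update them. The sketch below assumes a hypothetical resources file format with one `<folder-name> <git-url>` pair per line. The function name sync_resources and the default target folder ~/Tools are also our own choices.

```bash
# Sketch: clone or update every Git repository listed in a resources file.
# Assumed (hypothetical) format: "<folder-name> <git-url>" per line;
# empty lines and lines starting with "#" are ignored.
sync_resources() {
    local list_file="${1:?usage: sync_resources <list-file> [dest-dir]}"
    local dest_dir="${2:-$HOME/Tools}"   # hypothetical default location
    local name url
    mkdir -p "$dest_dir" || return 1
    # "|| [[ -n $name ]]" also processes a last line without a trailing newline
    while read -r name url || [[ -n "$name" ]]; do
        [[ -z "$name" || "$name" == \#* ]] && continue
        if [[ -d "$dest_dir/$name/.git" ]]; then
            echo "[*] Updating $name"
            git -C "$dest_dir/$name" pull --quiet
        else
            echo "[*] Cloning $name from $url"
            git clone --quiet "$url" "$dest_dir/$name"
        fi
    done < "$list_file"
}
```

Running `sync_resources resources.list ~/Tools` before each engagement clones anything new and pulls updates for everything already present.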

Automation scripts are operating system-dependent; for example, we can work with Bash, Python, and PowerShell. Creating automation scripts is a good exercise for learning and practicing scripting, and it can also help us prepare and even reinstall a system more efficiently. As we learn new methods and technologies, we will come across more tools, practical explanations, and cheat sheets. It is recommended that we keep a record of them and keep the entries up to date.

Note-taking is another essential part of our penetration testing, because we accumulate a lot of different information, results, and ideas that are difficult to remember all at once. There are five main types of information that need to be noted down:

1. Discovered Information

By "discovered information" we mean general information, such as new IP addresses, usernames, passwords, and source code, that we have identified and that is related to the penetration testing engagement and process. This is information that we can use against our target company. We often obtain such information through OSINT, active scans, and manual analysis of the given information resources and services.

2. Processing

We will acquire huge amounts of information, of all different types, during our penetration testing engagements. This requires that we be able to adapt our approach. The info we obtain may give us ideas for subsequent steps to take, while other vulnerabilities or misconfigurations may be forgotten or overlooked. Therefore, we should get in the habit of noting down everything we see that should be investigated as part of the assessment. Notion.so, Anytype, Obsidian and Xmind are very suitable for this.

Notion.so is a feature-rich online Markdown editor that offers many different functions and lets us shape our ideas and thoughts according to our preferences.

Xmind is an excellent mind map editor that can visualize relevant information components and processes very well.

Obsidian is a powerful knowledge base that works on top of a local folder of plain text Markdown files.

3. Results

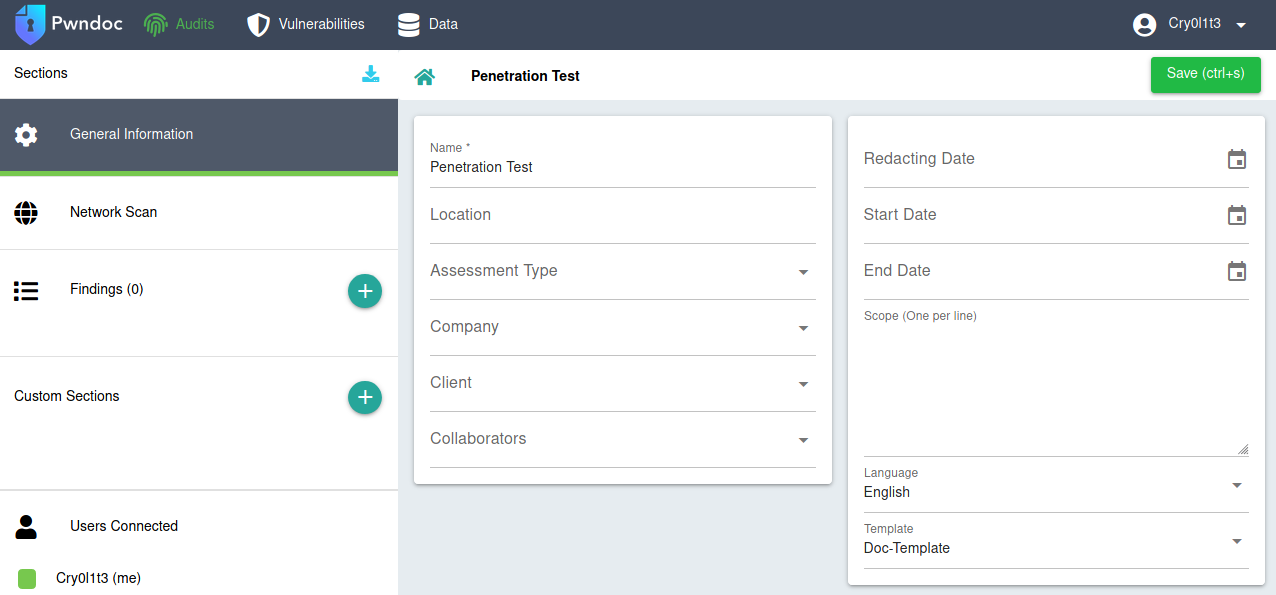

The results we get from our scans and penetration testing steps are significant. With so much information arriving in a short time, one can quickly feel overwhelmed. At first, it is not easy to filter out the most critical pieces of information; this comes with experience and practice. Only through practice can our eyes be trained to recognize the essential small fragments of information. Nevertheless, we should keep all information and results, both so as not to miss anything meaningful and because a piece of information may prove helpful later in the engagement. These results are also often used for documentation. For this, we can use GhostWriter or Pwndoc, which allow us to generate our documentation and keep a clear overview of the steps we have taken.

GhostWriter

Pwndoc

4. Logging

Logging is essential both for documentation and for our own protection. If third parties attack the company during our penetration test and damage occurs, we can prove that the damage did not result from our activities. For this, we can use the tools script and date. Date can be used to display the exact date and time of each command in our command line. With the help of script, every command and its output are saved to a file in the background. To display the date and time, we can replace the PS1 variable in our .bashrc file with the following content. This prompt also calls two helper scripts, /opt/vpnbash.sh and /opt/vpnserver.sh. Judging by the sample output, they print the VPN IP address and the VPN server name. On a system without these scripts, those parts of the prompt should be removed.

PS1

PS1="\[\033[1;32m\]\342\224\200\$([[ \$(/opt/vpnbash.sh) == *\"10.\"* ]] && echo \"[\[\033[1;34m\]\$(/opt/vpnserver.sh)\[\033[1;32m\]]\342\224\200[\[\033[1;37m\]\$(/opt/vpnbash.sh)\[\033[1;32m\]]\342\224\200\")[\[\033[1;37m\]\u\[\033[01;32m\]@\[\033[01;34m\]\h\[\033[1;32m\]]\342\224\200[\[\033[1;37m\]\w\[\033[1;32m\]]\n\[\033[1;32m\]\342\224\224\342\224\200\342\224\200\342\225\274 [\[\e[01;33m\]\$(date +%D-%r)\[\e[01;32m\]]\\$ \[\e[0m\]"

Date

─[eu-academy-1]─[10.10.14.2]─[Cry0l1t3@htb]─[~]
└──╼ [03/21/21-01:45:04 PM]$
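If we only want the timestamp and not the VPN information, a much shorter prompt is enough. The following sketch is our own simplification, not the prompt used above. It relies on Bash's `\D{format}` prompt escape, whose format is passed to strftime every time the prompt is displayed, so the time shown is always current.

```bash
# Sketch: a minimal timestamped prompt for ~/.bashrc (our own simplified variant).
# \D{...} is re-evaluated by Bash each time the prompt is drawn.
# \[ and \] mark the color codes as non-printing so line editing stays correct.
PS1='\[\e[01;33m\][\D{%m/%d/%y-%r}]\[\e[0m\] \u@\h:\w\$ '
```

After adding this line to ~/.bashrc, running `source ~/.bashrc` or opening a new shell applies it.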

To start logging with script (for Linux) and Start-Transcript (for Windows), we can use the following commands and adjust the log file name to our needs. It is recommended to define a naming format for the individual logs in advance. One option is the format <date>-<start time>-<name>.log.

Script

Cry0l1t3@htb[/htb]$ script 03-21-2021-0200pm-exploitation.log
Cry0l1t3@htb[/htb]$ <ALL THE COMMANDS>
Cry0l1t3@htb[/htb]$ exit

Start-Transcript

C:\> Start-Transcript -Path "C:\Pentesting\03-21-2021-0200pm-exploitation.log"
Transcript started, output file is C:\Pentesting\03-21-2021-0200pm-exploitation.log
C:\> ...SNIP...
C:\> Stop-Transcript

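Naming our logs this way lets us sort them by file name, so we no longer have to examine them manually to find the right one. Note that names only sort chronologically if the date runs from year to day and the time uses the 24-hour clock (for example, 2021-03-21-1400-exploitation.log). With a month-first, 12-hour format, logs from different years, or from morning and afternoon sessions, end up out of order. A consistent format also makes it more straightforward for our team members to understand what steps have been taken and when.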
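A small wrapper can apply the naming convention for us. The sketch below is our own and not a standard tool. The function names log_filename and startlog and the default log folder are hypothetical. It uses a year-first date with a 24-hour time so that the resulting names sort chronologically.

```bash
# Sketch: build a log name following <date>-<start time>-<name>.log,
# e.g. 2021-03-21-1400-exploitation.log (year first, 24-hour clock).
log_filename() {
    local name="${1:?usage: log_filename <name>}"
    echo "$(date +%Y-%m-%d-%H%M)-${name}.log"
}

# Start a script(1) session that records the terminal into that file.
startlog() {
    local dir="${LOG_DIR:-$HOME/Penetration-Testing/Logs}"   # hypothetical default location
    local file
    mkdir -p "$dir" || return 1
    file="$dir/$(log_filename "$1")" || return 1
    echo "[*] Recording session to $file (type 'exit' to stop)"
    script "$file"
}
```

`startlog exploitation` starts a recorded shell, and typing `exit` ends the recording.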

Another significant advantage is that we can later analyze our approach to optimize our process. If we find ourselves repeating the same one or two steps, often in combination, it may be worthwhile to automate them with a simple script to save time.

In addition, most tools offer the option to save their results to separate files. It is highly recommended to always use these functions, because results can change over time. If specific results seem to have changed, we can then compare the current results with the previous ones. There are also terminal tools, such as Tmux and Ghostty, that allow us, among other things, to log all commands and output automatically. If we come across a tool that does not allow us to log its output, we can work with redirections and the tee program, as follows:

Linux Output Redirection

Cry0l1t3@htb[/htb]$ ./custom-tool.py 10.129.28.119 >> logs.custom-tool

Cry0l1t3@htb[/htb]$ ./custom-tool.py 10.129.28.119 | tee -a logs.custom-tool

Windows Output Redirection

C:\> .\custom-tool.ps1 10.129.28.119 >> logs.custom-tool

C:\> .\custom-tool.ps1 10.129.28.119 | Out-File -Append logs.custom-tool
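The Linux examples above only capture standard output, so error messages still appear only on the terminal. A minimal Bash helper that also records stderr and writes a timestamped header for each run could look like the one below. The name logrun is hypothetical.

```bash
# Sketch: run a command, show its output live, and append stdout AND stderr to a log.
# Usage: logrun <logfile> <command> [args...]
logrun() {
    local logfile="$1"; shift
    # Header line so that separate runs in the same file can be told apart
    printf '### %s | %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*" >> "$logfile"
    # 2>&1 merges stderr into stdout so error messages are logged too
    "$@" 2>&1 | tee -a "$logfile"
    # Return the exit status of the command itself, not of tee
    return "${PIPESTATUS[0]}"
}
```

With this helper, the first example becomes `logrun logs.custom-tool ./custom-tool.py 10.129.28.119`.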

5. Screenshots

Screenshots serve as a momentary record and as proof of the results obtained, which we need for the Proof of Concept and our documentation. One of the best tools for this is Flameshot. It has all the essential functions we need to quickly edit our screenshots without an additional editing program. We can install it using the APT package manager or download it from GitHub.

Flameshot

Sometimes, however, we cannot show all the necessary steps in one or more screenshots. In such cases, we can use an application called Peek to create GIFs that record all the required actions for us.

Peek
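To give each of the five types of information a fixed place from day one, we can create the same skeleton for every engagement. The sketch below assumes that we keep notes as plain Markdown files; Obsidian, for example, can open such a folder directly as a vault. The function name new_notes, the default folder, and the file layout are our own choices.

```bash
# Sketch: create a notes skeleton for a new engagement (layout is our own choice).
# Notes are plain Markdown files; logs and screenshots get their own folders.
new_notes() {
    local engagement="${1:?usage: new_notes <engagement> [base-dir]}"
    local base="${2:-$HOME/Penetration-Testing/Notes}"   # hypothetical default location
    local dir="$base/$engagement" note
    mkdir -p "$dir/Logs" "$dir/Screenshots" || return 1
    for note in "Discovered Information" "Processing" "Results"; do
        # Start each note with a heading, but never overwrite existing notes
        if [[ ! -e "$dir/$note.md" ]]; then
            printf '# %s\n\n' "$note" > "$dir/$note.md"
        fi
    done
    echo "$dir"
}
```

For example, `new_notes Inlanefreight` prints the path of the folder it created. Running it again leaves existing notes untouched.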

Virtualization is an abstraction of physical computing resources. Both hardware and software components can be abstracted. A computer component created as part of virtualization is referred to as a virtual or logical component and can be used precisely as its physical counterpart. The main advantage of virtualization is the abstraction layer between the physical resource and the virtual image. This is the basis of various cloud services, which are becoming increasingly important in everyday business. Note that virtualization must be distinguished from the concepts of simulation and emulation.

By enabling physical computing resources—such as hardware, software, storage, and network components—to be represented and accessed in a virtual form, virtualization allows these resources to be distributed to different users in a flexible and demand-driven manner. This approach is intended to improve the overall utilization of computing resources. One of its key goals is to enable the execution of applications on systems that would not normally support them. In the context of virtualization, we typically distinguish between:

Hardware virtualization refers to technologies that allow hardware components to be accessed independently of their physical form through the use of hypervisor software. The best-known example of this is the virtual machine (VM). A VM is a virtual computer that behaves like a physical computer, from its hardware to the operating system. Virtual machines run as virtual guest systems on one or more physical systems referred to as hosts. VirtualBox, for example, can be extended with the VirtualBox Guest Additions, a set of drivers and system applications designed to improve the performance and usability of guest operating systems.

A virtual machine (VM) is a virtual computer with its own operating system that runs on a host system (an actual physical computer). Several VMs, isolated from each other, can be operated in parallel. A hypervisor allocates the physical hardware resources of the host system to them. Each VM is a sealed-off, virtualized environment, so several guest systems, regardless of their operating system, can run in parallel on one physical computer. The VMs act independently of each other and do not influence each other. The hypervisor manages the hardware resources. From the virtual machine's point of view, the computing power, RAM, hard disk capacity, and network connections allocated to it are exclusively its own.

From the application perspective, an operating system installed within the VM behaves as if it were installed directly on the hardware. In general, neither the applications nor the operating system can tell that they are running in a virtual environment. Virtualization usually comes with some performance loss for the VM, because the intermediate virtualization layer itself requires resources. Nevertheless, VMs offer numerous advantages over running an operating system or application directly on a physical system. The most important benefits are:

An excellent and free alternative to VMware Workstation Pro is VirtualBox. With VirtualBox, hard disks are emulated in container files called Virtual Disk Images (VDI). Aside from VDI format, VirtualBox can also handle hard disk files from VMware virtualization products (.vmdk), the Virtual Hard Disk format (.vhd), and others. We can also convert these external formats using the VBoxManage command-line tool that is part of VirtualBox. We can install VirtualBox from the command line, or download the installation file from the official website and install it manually.
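As a minimal sketch, assuming VirtualBox (and therefore VBoxManage) is installed, a VMware or VHD disk could be converted to VDI with VBoxManage's clonemedium subcommand. The wrapper name to_vdi and its default target name (the source name with a .vdi extension) are our own choices.

```bash
# Sketch: convert a .vmdk or .vhd disk image into VirtualBox's native VDI format.
# If no target is given, the source file name is reused with a .vdi extension.
to_vdi() {
    local src="${1:?usage: to_vdi <source-disk> [target.vdi]}"
    local dst="${2:-${src%.*}.vdi}"
    if ! command -v VBoxManage >/dev/null 2>&1; then
        echo "VBoxManage not found; is VirtualBox installed?" >&2
        return 1
    fi
    # clonemedium writes a new image, so the source disk is left untouched
    VBoxManage clonemedium disk "$src" "$dst" --format VDI
}
```

For example, `to_vdi Parrot.vmdk` would create Parrot.vdi next to the original file.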

VirtualBox is very common in private use. The installation is easy and usually requires no additional configuration for it to launch. We can download VirtualBox from their homepage: https://www.virtualbox.org/

VirtualBox Download

Alternatively, on Ubuntu Linux we can use the following command to install both VirtualBox and the extension pack at once:

VirtualBox Installation

cry0l1t3@htb[/htb]$ sudo apt install virtualbox virtualbox-ext-pack -y

The VirtualBox extension pack enhances the overall functionality of VirtualBox with the following features:

Proxmox is an open-source, enterprise-grade server virtualization and management platform that uses the Kernel-based Virtual Machine (KVM) for full virtualization and Linux Containers (LXC) for container-based virtualization. It is used by businesses and in large data centers.

Proxmox provides three main solutions which can be downloaded here:

Proxmox VE ISO Download

This software allows us to build and simulate entire networks, including complex setups using any type of virtual machine or container you can think of. We can download the Proxmox VE ISO file and install it on VirtualBox to experiment with it without the need for additional hardware resources.

After the download completes, we can create a new VM and assign the ISO image to it. Be sure to assign at least 4GB RAM and 2 CPUs for the Proxmox VM.
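The same VM can also be created from the command line. The following sketch uses VBoxManage. The VM name, ISO path, 32 GB disk size, SATA controller name, and VirtualBox's default "VirtualBox VMs" folder are assumptions to adjust. Enabling nested virtualization (`--nested-hw-virt on`) is our own addition: it lets Proxmox use KVM hardware acceleration for its own guests, and it requires support from the host CPU. With DRY_RUN=1, the commands are only printed.

```bash
# Sketch: create the Proxmox VM with VBoxManage instead of the GUI wizard.
# DRY_RUN=1 only prints the commands. Assumes VirtualBox's default machine folder.
vbox() {
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
        echo "VBoxManage $*"
    else
        VBoxManage "$@"
    fi
}

create_proxmox_vm() {
    local name="${1:?usage: create_proxmox_vm <name> <iso> [memory-mb] [cpus] [disk-mb]}"
    local iso="${2:?missing ISO path}"
    local memory_mb="${3:-4096}" cpus="${4:-2}" disk_mb="${5:-32768}"
    local disk="$HOME/VirtualBox VMs/$name/$name.vdi"

    vbox createvm --name "$name" --ostype Debian_64 --register || return 1
    # At least 4 GB RAM and 2 CPUs; nested virtualization for KVM inside Proxmox
    vbox modifyvm "$name" --memory "$memory_mb" --cpus "$cpus" --nested-hw-virt on || return 1
    vbox createmedium disk --filename "$disk" --size "$disk_mb" || return 1
    vbox storagectl "$name" --name SATA --add sata || return 1
    vbox storageattach "$name" --storagectl SATA --port 0 --device 0 --type hdd --medium "$disk" || return 1
    # Attach the Proxmox VE ISO as a DVD so that the VM boots the installer
    vbox storageattach "$name" --storagectl SATA --port 1 --device 0 --type dvddrive --medium "$iso"
}
```

Running `DRY_RUN=1 create_proxmox_vm Proxmox ~/Downloads/<proxmox-iso>` first shows exactly what would be executed.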

Once everything is set up, we should double-check all the settings. If everything looks good, we can now start the VM.

When the VM boots up, we're greeted with the Proxmox Virtual Environment screen. Now, we can select the option to install Proxmox VE using the graphical interface.

Pay close attention throughout the setup and read each screen carefully. Once Proxmox is installed, you will see the login screen along with the URL of the management web page.

The same credentials you used during the installation will be used to log into the web dashboard. Your credentials will be root:<your password>.

At this point, you should see all the configuration options for your virtualized “Datacenter”. This is where you can upload and create VMs and containers, create networks, and much more. These VMs and containers run inside the virtualized Proxmox environment and do not need to be added to VirtualBox separately.

Linux is the most widely used operating system for penetration testing. As such, we must be proficient with it (or at the very least, familiar). When setting up an operating system for this purpose, it is best to establish a standardized configuration that consistently results in an environment we are comfortable working with.

Suppose we are asked to perform a penetration test that covers both the internal and the external security of a network. If we have not yet prepared a dedicated system, now is the time to do so. Otherwise, we risk wasting valuable time during the engagement on setup and configuration, time that could be better spent testing various components of the network. However, before we prepare a system, we need to look at the available penetration testing distributions.

There are many different distributions we can use, and each has its own advantages and disadvantages. Many people ask themselves which system is the best. What many do not understand is that this is a personal decision: which distribution is best for us depends primarily on our own needs and preferences. The tools available on these operating systems can be installed on pretty much any distribution, so we should instead ask ourselves what we expect from a Linux distribution. The best penetration testing distributions are characterized by a large, active community and detailed documentation. Popular choices include, but are not limited to:

| ParrotOS (Pwnbox) | Kali Linux | BlackArch | BackBox |
|---|---|---|---|

In this scenario, we will deal with ParrotOS Security as our penetration testing distribution of choice. Let's select the category Live to get a full version of the operating system.

Next, we see three different editions of ParrotOS.

In this case, we will select the HTB edition. Feel free to follow along.

After that, you will see the download button and the default credentials, in case you want to use it without installation.

Before installing our ParrotOS Security operating system, we need to create a VM (in VirtualBox in this example). Here, we also specify which installation file will be used for the operating system (.iso file).

ParrotOS ISO

Since VirtualBox does not recognize every operating system by default, it may not correctly detect ParrotOS. Therefore, we need to manually specify which distribution it is based on—in this case, Debian.

We also need to give the VM a name and then set the path where it will be stored.

After that, we can set the maximum size of the VM's virtual hard disk. It is recommended to allocate more than 20 GB, since the VM will grow as we install packages and applications during setup and use.

OS Size

Once we have created our VM, we can start it and reach the GRUB menu to select our options. Since we want to install ParrotOS, we should select that option. Once we click inside the VM window, the VM captures our mouse, meaning the pointer cannot leave the window. To release the mouse, we have to press the host key, which is [Right CTRL] by default in most cases. However, we should verify this key in VirtualBox under Preferences > Input > Virtual Machine > Host Key Combination.

Note: We can adapt all of these steps to our needs. However, to get the same result, we should stick to the selections shown here so that the steps stay uniform.

Once you click on Try / Install, you'll enter the live mode of ParrotOS. This mode lets you explore and test the system without installing it. When you click Install Debian, the installation process begins. We are then prompted to choose our language, location, keyboard layout, and partitioning method.

Since we want the data on our VM to be encrypted and managed with the Logical Volume Manager (LVM), we should select the "Encrypt system" option. It is also recommended to select "Swap (no Hibernate)" so that our system gets a swap area.

LVM is a partitioning scheme. Mainly used in Unix and Linux environments, it provides a level of abstraction between disks, partitions, and file systems. Using LVM, it is possible to form dynamically changeable partitions which extend over several disks. After selecting LVM, we'll be prompted to enter a username, hostname, and password.

Once we have made all the required entries, we can confirm them and start configuring LVM.

LVM acts as an additional layer between physical storage and the operating system’s logical storage. LVM can also organize logical volumes into RAID arrays to protect against hard disk failure. Without such a RAID configuration, however, LVM by itself does not provide redundancy. While primarily used in Linux and Unix, similar features exist in Windows (Storage Spaces) and macOS (CoreStorage).

Once we get to the partitioning step, we will be asked for an encryption passphrase. We should keep in mind that this passphrase should be very strong and must be stored securely, ideally with a password manager. We will then have to re-enter the passphrase to confirm that no mistakes were made.

LVM Passphrase

After setting the passphrase, we will get an overview of all the partitions that have been created and configured. Other options will also be made available to us, as shown above. If no further configurations are needed, we can finish partitioning and apply the changes.

The operating system will now begin installing, and when it completes, the virtual machine will automatically restart. Upon reboot, we’ll be prompted to enter the encryption passphrase we created earlier to unlock the encrypted system and proceed with booting.

LVM Unlock Partition

If we have entered the passphrase correctly, then the operating system will boot up completely, and we will be able to log in. Here we enter the password for the username we have created.

First Login

Now that we have installed the operating system, we need to bring it up to date. For this, we will use the APT package management tool. The Advanced Package Tool (APT) is a package management system that originated in the Debian operating system and uses dpkg under the hood to manage .deb packages. Package managers are used to search for, update, and install program packages and their dependencies. APT uses repositories (package sources), which are listed in the file /etc/apt/sources.list and in files under /etc/apt/sources.list.d/ (in our case for ParrotOS: /etc/apt/sources.list.d/parrot.list).

ParrotOS Sources List

Linux

┌─[cry0l1t3@parrot]─[~]

└──╼ $ cat /etc/apt/sources.list.d/parrot.list | grep -v "#"

deb https://deb.parrot.sh/parrot lory main contrib non-free non-free-firmware

deb https://deb.parrot.sh/direct/parrot lory-security main contrib non-free non-free-firmware

deb https://deb.parrot.sh/parrot lory-backports main contrib non-free non-free-firmware

These entries tell APT which servers (here, HTTPS mirrors) to contact in order to obtain packages for installation. When we search for or install a package, APT looks it up in the package lists it has downloaded from all configured repositories. Since installed versions can be compared quickly with the versions available in the repositories, and newer packages can be downloaded automatically, updating existing packages with APT is easy and convenient.
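
Each active line in these files follows the one-line format `deb URI suite component...`: the mirror's base URI, the distribution suite (here `lory`, `lory-security`, or `lory-backports`), and the archive components to enable. A small bash sketch that splits such a line into its fields makes the structure explicit. `parse_deb_line` is a hypothetical helper, and it deliberately ignores bracketed options such as `[arch=amd64]` as well as the newer deb822 `.sources` format.

```shell
#!/usr/bin/env bash
# parse_deb_line LINE  (hypothetical helper)
# Splits a one-line APT source entry into its fields.
# Assumes the simple form "deb URI SUITE COMPONENT..." without [options].
parse_deb_line() {
  local type uri suite components
  # read splits on whitespace; everything after the suite lands in "components"
  read -r type uri suite components <<< "$1"
  echo "type=$type"
  echo "uri=$uri"
  echo "suite=$suite"
  echo "components=$components"
}
```

APT combines the URI and suite to fetch `<URI>/dists/<suite>/InRelease`, which is what the `Hit: ... InRelease` lines in the update output below refer to.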

Updating ParrotOS

Linux

┌─[cry0l1t3@parrotos]─[~]

└──╼ $ sudo apt update -y && sudo apt full-upgrade -y && sudo apt autoremove -y && sudo apt autoclean -y

[sudo] password for cry0l1t3: **********************

Hit:1 https://deb.parrot.sh/parrot rolling InRelease

Hit:2 https://deb.parrot.sh/parrot rolling-security InRelease

Reading package lists... Done

Building dependency tree

Reading state information... Done

2310 packages can be upgraded. Run 'apt list --upgradable' to see them.

Reading package lists... Done

Building dependency tree

Reading state information... Done

Calculating upgrade... Done

The following packages were automatically installed and are no longer required:

cryptsetup-nuke-password dwarfdump

<SNIP>

Below, we have a list of common pentesting tools:

Tools List

Linux

┌─[cry0l1t3@parrotos]─[~]

└──╼ $ cat tools.list

netcat

ncat

nmap

wireshark

tcpdump

hashcat

ffuf

gobuster

hydra

zaproxy

proxychains

sqlmap

radare2

metasploit-framework

python2.7

python3

spiderfoot

theharvester

remmina

xfreerdp

rdesktop

crackmapexec

exiftool

curl

seclists

testssl.sh

git

vim

tmux

Most of these packages are already installed on ParrotOS. If there are only a few packages that we want to install, we can list them manually with the following command.

Installing Additional Tools

Linux

┌─[cry0l1t3@parrotos]─[~]

└──╼ $ sudo apt install netcat ncat nmap wireshark tcpdump ...SNIP... git vim tmux -y

[sudo] password for cry0l1t3:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

libarmadillo9 libboost-locale1.71.0 libcfitsio8 libdap25 libgdal27 libgfapi0

<SNIP>

However, if we are working with a longer list of tools, it is more efficient to keep them in a tool list file and use it to automate the installation. This ensures consistency and saves time during future setups:

Installing Additional Tools from a List

Linux

┌─[cry0l1t3@parrotos]─[~]

└──╼ $ sudo apt install $(cat tools.list | tr "\n" " ") -y

[sudo] password for cry0l1t3:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

libarmadillo9 libboost-locale1.71.0 libcfitsio8 libdap25 libgdal27 libgfapi0

<SNIP>
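
The `$(cat tools.list | tr "\n" " ")` substitution works as long as the file contains nothing but package names. A slightly more robust variant, sketched below under the assumption that we want to allow blank lines and `#` comments in `tools.list`, filters the file first and hands the names to `apt` via `xargs`. The helper names `list_packages` and `install_from_list` are hypothetical.

```shell
#!/usr/bin/env bash
# list_packages FILE  (hypothetical helper)
# Prints one package name per line, stripping "#" comments,
# trimming surrounding whitespace, and skipping empty lines.
list_packages() {
  sed -e 's/#.*//' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' "$1" | grep -v '^$'
}

# install_from_list FILE  (hypothetical helper)
# Installs all packages in a single apt call; -r (GNU xargs) skips apt if the list is empty.
install_from_list() {
  list_packages "$1" | xargs -r sudo apt install -y
}
```

With this, `install_from_list tools.list` installs the same set of tools, and we are free to annotate the list with comments such as `# web fuzzing`.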

We will likely come across tools that are not available in the standard repositories and therefore need to be downloaded manually from GitHub. For example, assume we are missing some tools for privilege escalation and want to download the Privilege-Escalation-Awesome-Scripts-Suite. We would use the following command:

Clone Github Repository

Linux

┌─[cry0l1t3@parrotos]─[~]

└──╼ $ git clone https://github.com/carlospolop/privilege-escalation-awesome-scripts-suite.git

Cloning into 'privilege-escalation-awesome-scripts-suite'...

remote: Enumerating objects: 29, done.

remote: Counting objects: 100% (29/29), done.

remote: Compressing objects: 100% (17/17), done.

remote: Total 5242 (delta 18), reused 22 (delta 11), pack-reused 5213

Receiving objects: 100% (5242/5242), 18.65 MiB | 5.11 MiB/s, done.

Resolving deltas: 100% (3129/3129), done.

List Contents

Linux

┌─[cry0l1t3@parrotos]─[~]

└──╼ $ ls -l privilege-escalation-awesome-scripts-suite/

total 16

-rwxrwxr-x 1 cry0l1t3 cry0l1t3 1069 Mar 23 16:41 LICENSE

drwxrwxr-x 3 cry0l1t3 cry0l1t3 4096 Mar 23 16:41 linPEAS

-rwxrwxr-x 1 cry0l1t3 cry0l1t3 2506 Mar 23 16:41 README.md

drwxrwxr-x 4 cry0l1t3 cry0l1t3 4096 Mar 23 16:41 winPEAS

After installing the relevant packages and repositories, it is highly recommended to take a VM snapshot. In the following steps, we will make changes to specific configuration files, and if we are not careful, a human error could make parts of the system (or even the entire system) unusable. We definitely do not want to repeat all of our previous steps, so let's now create a snapshot and name it "Initial Setup".

However, before we create this snapshot, we should first shut down the virtual machine. Doing so not only speeds up the snapshot process but also ensures that the snapshot is taken in a clean, powered-off state, reducing the chance of file system corruption or inconsistencies.

If any mistakes are made while performing further configurations or testing, we can simply restore the snapshot and continue from a working state. It's good practice to take a snapshot after every major configuration change, and even periodically during a penetration test, to avoid losing valuable progress.
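
If the hypervisor is VirtualBox, the shutdown and snapshot steps can also be scripted with `VBoxManage`. The sketch below assumes VirtualBox with `VBoxManage` on the `PATH` and a VM named `ParrotOS` (a hypothetical name; use the name shown in the VirtualBox Manager). `acpipowerbutton` asks the guest to shut down gracefully, the loop polls `showvminfo --machinereadable` until the state is `poweroff`, and only then is the snapshot taken. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: cleanly power off a VirtualBox VM, then take a named snapshot.
# Assumptions: VirtualBox is the hypervisor and VBoxManage is on the PATH.
# DRY_RUN=1 prints the VBoxManage commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# vm_state VM -> e.g. "running" or "poweroff"
vm_state() {
  # --machinereadable prints a line such as: VMState="poweroff"
  VBoxManage showvminfo "$1" --machinereadable | sed -n 's/^VMState="\(.*\)"$/\1/p'
}

# snapshot_vm VM NAME
snapshot_vm() {
  local vm="$1" name="$2" tries=0
  # Ask the guest OS to shut down gracefully (like pressing the power button)
  run VBoxManage controlvm "$vm" acpipowerbutton
  if [ "${DRY_RUN:-0}" != "1" ]; then
    # Wait up to about 3 minutes for the guest to reach the powered-off state
    until [ "$(vm_state "$vm")" = "poweroff" ]; do
      tries=$((tries + 1))
      if [ "$tries" -gt 90 ]; then
        echo "VM '$vm' did not power off in time" >&2
        return 1
      fi
      sleep 2
    done
  fi
  run VBoxManage snapshot "$vm" take "$name"
}
```

Usage: `snapshot_vm ParrotOS "Initial Setup"`. Rerunning it with a different name after each major configuration change gives us the trail of restore points described above.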

Create a Snapshot

Completed Tasks

In addition to LVM encryption, we can encrypt the entire VM with another strong password. This adds an extra layer of protection for our results and any customer data residing on the system: no one will be able to start the VM without the password we set.

Now that we have shut down and powered off the VM, we go to Edit virtual machine settings and select the Options tab.

VirtualBox Disk Encryption Settings

There we will find additional settings that we can use later. Relevant for us now are the Access Control settings. Once the VM is encrypted, we will not be able to clone it without first decrypting it. More about this can be found in the VirtualBox documentation.

Windows computers play an essential role in most companies, making them one of the main targets for aspiring penetration testers like ourselves. However, they can also serve as effective penetration testing platforms. There are several advantages to using Windows as our daily driver. It blends into most enterprise environments, making us appear less suspicious both physically and on the network. Additionally, navigating and communicating with other hosts on an Active Directory domain is often easier from Windows than from Linux with Python-based tooling, and traversing SMB and using shares is more straightforward as well. With this in mind, it can be beneficial to familiarize ourselves with Windows and establish a standard configuration that provides a stable and effective platform for our activities.

Building our penetration testing platform can help us in multiple ways:

With all this in mind, where do we start? Fortunately, Windows now offers many features that were not available years ago. Windows Subsystem for Linux (WSL) is an excellent example: it allows Linux operating systems to run alongside our Windows installation. This gives us a place to run tools developed for Linux directly on our Windows host, without having to manage a separate VM in a hypervisor such as VirtualBox or install a third-party application such as Docker.

This section covers the installation of the core components needed to get our systems in fighting shape, such as WSL, Visual Studio Code, Python, Git, and the Chocolatey package manager. Since we are using this platform for penetration testing, we will also have to change our host's security settings. Keep in mind that most exploitation tools and code are just that, USED for EXPLOITATION, and can harm our own host if we are not careful. We should be mindful of what we install and run. If we do not exclude the folders holding these tools, Windows Defender will almost certainly delete any files and applications it deems harmful, breaking our setup. Now, let's dive in.

The installation of the Windows VM is done in the same way as the Linux VM. We can do this on a bare-metal host or in a hypervisor. With either option, we have certain requirements to meet and things to consider when installing Windows 10.

Hardware Requirements

Ideally, we want a moderately powerful processor that can handle intensive loads at times. If we run Windows virtualized, our host will need at least four cores so that two can be assigned to the VM. Windows can grow quite large with updates and tool installations, so 80 GB of storage or more is ideal. As for RAM, 4 GB is the minimum to avoid latency or other issues during our penetration tests.
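
When the hypervisor runs on a Linux host, we can check the host against these figures before creating the VM. The bash sketch below assumes GNU coreutils (`nproc`, `df --output`); the helper names are hypothetical, and it only checks cores and free disk space in the folder that will hold the VM image. The host needs RAM headroom beyond the VM's 4 GB, which is easier to judge by hand.

```shell
#!/usr/bin/env bash
# Pre-flight check of a Linux host against the figures in this section.
# Assumptions: GNU coreutils; thresholds (4 cores, 80 GB) are taken from the text.

# check_min LABEL HAVE NEED -> prints OK/LOW, returns non-zero if HAVE < NEED
check_min() {
  if [ "$2" -ge "$3" ]; then
    echo "OK  $1: $2 (need >= $3)"
  else
    echo "LOW $1: $2 (need >= $3)"
    return 1
  fi
}

# free_disk_gb [DIR] -> free space in GB on the filesystem holding DIR
free_disk_gb() {
  df -BG --output=avail "${1:-.}" | tail -n 1 | tr -dc '0-9'
}

# preflight [VM_DIR] -> runs all checks, returns non-zero if any check fails
preflight() {
  local vm_dir="${1:-$HOME}" rc=0
  check_min "CPU cores" "$(nproc)" 4 || rc=1
  check_min "Free disk (GB)" "$(free_disk_gb "$vm_dir")" 80 || rc=1
  return "$rc"
}
```

Running `preflight ~/VMs` prints one OK or LOW line per requirement.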

Software Requirements

Unlike most Linux distributions, Windows is a licensed product, so we must make sure we adhere to its terms of use. For now, a great place to start is a copy of Microsoft's Windows Developer VM, which we can use to begin building out our platform. The Developer Evaluation VM comes pre-configured with:

| Pre-installed component |
| --- |
| Windows 10 Version 2004 |
| Windows 10 SDK Version 2004 |
| Visual Studio 2019 with the UWP, .NET desktop, and Azure workflows enabled, plus the Windows Template Studio extension |
| Visual Studio Code |
| Windows Subsystem for Linux with Ubuntu installed |
| Developer mode enabled |

The VM comes pre-configured with the user IEUser and the password Passw0rd!. Since it is a trial virtual machine, it expires after 90 days, so we should keep this in mind when configuring it. Once we have a baseline VM, we should take a snapshot.

To prepare our Windows host, we have to make a few changes before moving onto the fun stuff (e.g., installing our pentesting tools):

To stay consistent with our command-line workflow, we will make a conscious effort to use the command line whenever possible. To install updates on our host, we will need the PSWindowsUpdate module. To acquire it, we open an administrator PowerShell window and issue the following commands:

Updates

Windows

PS C:\htb> Get-ExecutionPolicy -List

Scope ExecutionPolicy

----- ---------------

MachinePolicy Undefined

UserPolicy Undefined

Process Undefined

CurrentUser Undefined

LocalMachine Undefined

We must first check our system's execution policy to ensure we can download, load, and run modules and scripts. The command above lists the policy set for each scope. Since we do not want this change to be permanent, we will only change the execution policy for the Process scope.

Execution Policy

Windows

PS C:\htb> Set-ExecutionPolicy Unrestricted -Scope Process

Execution Policy Change

The execution policy helps protect you from scripts that you do not trust.

Changing the execution policy might expose you to the security risks described in the about_Execution_Policies help topic at https:/go.microsoft.com/fwlink/?LinkID=135170. Do you want to change the execution policy?

[Y] Yes [A] Yes to All [N] No [L] No to All [S] Suspend [?] Help (default is "N"): A

PS C:\htb> Get-ExecutionPolicy -List

Scope ExecutionPolicy

----- ---------------

MachinePolicy Undefined

UserPolicy Undefined

Process Unrestricted

CurrentUser Undefined

LocalMachine Undefined

Once we set our execution policy, we should check again to make sure the change took effect. Changing the policy for the Process scope ensures that the change is temporary and only applies to the current PowerShell process. Changing it for the CurrentUser or LocalMachine scope modifies a registry setting that persists until we change it back.

Now that we have our ExecutionPolicy set, let us install the PSWindowsUpdate module and apply our updates. We can do so by:

PSWindowsUpdate

Windows

PS C:\htb> Install-Module PSWindowsUpdate

Untrusted repository

You are installing the modules from an untrusted repository. If you trust this repository,

change its InstallationPolicy value by running the Set-PSRepository cmdlet.

Are you sure you want to install the modules from 'PSGallery'?

[Y] Yes [A] Yes to All [N] No [L] No to All [S] Suspend [?] Help (default is "N"): A

Once the module installation completes, we can import it and run our updates.

Windows

PS C:\htb> Import-Module PSWindowsUpdate

PS C:\htb> Install-WindowsUpdate -AcceptAll

X ComputerName Result KB Size Title

- ------------ ------ -- ---- -----

1 DESKTOP-3... Accepted KB2267602 510MB Security Intelligence Update for Microsoft Defender Antivirus - KB2267602...

1 DESKTOP-3... Accepted 17MB VMware, Inc. - Display - 8.17.2.14

2 DESKTOP-3... Downloaded KB2267602 510MB Security Intelligence Update for Microsoft Defender Antivirus - KB2267602...

2 DESKTOP-3... Downloaded 17MB VMware, Inc. - Display - 8.17.2.14

3 DESKTOP-3... Installed KB2267602 510MB Security Intelligence Update for Microsoft Defender Antivirus - KB2267602...

3 DESKTOP-3... Installed 17MB VMware, Inc. - Display - 8.17.2.14

PS C:\htb> Restart-Computer -Force

The PowerShell example above imports the PSWindowsUpdate module, runs the update installer, and then reboots the PC to apply the changes. We should make a point of running updates regularly, especially if we plan to use this host frequently rather than rebuilding it at the end of each engagement. Now that our updates are installed, let us get our package manager and other core tools.

Chocolatey is a free and open-source package management solution that can manage the installation and dependencies of our software packages and scripts. It also allows for automation with PowerShell, Ansible, and several other management solutions. Chocolatey enables us to install the tools we need from one source, rather than downloading and installing each tool individually from the internet. The PowerShell commands below show how to install Chocolatey and use it to install our tools.

Chocolatey

Windows

PS C:\htb> Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))

Forcing web requests to allow TLS v1.2 (Required for requests to Chocolatey.org)

Getting latest version of the Chocolatey package for download.

Not using proxy.

Getting Chocolatey from https://community.chocolatey.org/api/v2/package/chocolatey/0.10.15.

Downloading https://community.chocolatey.org/api/v2/package/chocolatey/0.10.15 to C:\Users\DEMONS~1\AppData\Local\Temp\chocolatey\chocoInstall\chocolatey.zip

Not using proxy.

Extracting C:\Users\DEMONS~1\AppData\Local\Temp\chocolatey\chocoInstall\chocolatey.zip to C:\Users\DEMONS~1\AppData\Local\Temp\chocolatey\chocoInstall

Installing Chocolatey on the local machine

Creating ChocolateyInstall as an environment variable (targeting 'Machine')

Setting ChocolateyInstall to 'C:\ProgramData\chocolatey'

...SNIP...

Chocolatey (choco.exe) is now ready.

You can call choco from the command-line or PowerShell by typing choco.

Run choco /? for a list of functions.

You may need to shut down and restart powershell and/or consoles

first prior to using choco.

Ensuring Chocolatey commands are on the path

Ensuring chocolatey.nupkg is in the lib folder

We have now installed Chocolatey. The PowerShell command we issued sets the execution policy for the current session, enables TLS 1.2 for web requests (the value 3072), then downloads the installer script from chocolatey.org and runs it. Next, we will update Chocolatey and start installing packages. To avoid issues, it is recommended that we restart our host periodically.

Windows

PS C:\htb> choco upgrade chocolatey -y

Chocolatey v0.10.15

Upgrading the following packages:

chocolatey

By upgrading, you accept licenses for the packages.

chocolatey v0.10.15 is the latest version available based on your source(s).

Chocolatey upgraded 0/1 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

Now that we have confirmed that Chocolatey is up to date, let's manage our packages. To install packages with choco, we can issue a command such as choco install pkg1 pkg2 pkg3, listing the packages we need separated by spaces. Alternatively, we can use a packages.config file, an XML file that Chocolatey can reference to install a list of packages. Another helpful command is choco info pkg, which shows various information about a package if it is available in the Chocolatey repository. See the install page for more information on how to use Chocolatey.
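
Since packages.config is plain XML, we can generate one from a simple list of package names. The bash sketch below is an illustration, assuming it is run from WSL or Git Bash. `make_packages_config` is a hypothetical helper, and the file it writes contains only package IDs. Chocolatey's format also allows optional attributes such as `version`, which the sketch omits.

```shell
#!/usr/bin/env bash
# make_packages_config PKG...  (hypothetical helper)
# Writes a minimal Chocolatey packages.config to stdout, one <package> per argument.
make_packages_config() {
  echo '<?xml version="1.0" encoding="utf-8"?>'
  echo '<packages>'
  local pkg
  for pkg in "$@"; do
    printf '  <package id="%s" />\n' "$pkg"
  done
  echo '</packages>'
}
```

For example, `make_packages_config python vscode git wsl2 openssh openvpn > packages.config` produces a file that we can then install from an administrator PowerShell prompt with `choco install packages.config -y`.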

Windows

PS C:\htb> choco info vscode

Chocolatey v0.10.15

vscode 1.55.1 [Approved]

Title: Visual Studio Code | Published: 4/9/2021

Package approved as a trusted package on Apr 09 2021 01:34:23.

Package testing status: Passing on Apr 09 2021 00:49:32.

Number of Downloads: 1999367 | Downloads for this version: 19751

Package url

Chocolatey Package Source: https://github.com/chocolatey-community/chocolatey-coreteampackages/tree/master/automatic/vscode

Package Checksum: 'fTzzpEG+cspu7FUdqMbj8EqaD8cRIQ/cXtAUv7JGVB9uc23vuGNiuceqM94irt+nx8MGM0xAcBwdwBH+iE+tgQ==' (SHA512)

Tags: microsoft visualstudiocode vscode development editor ide javascript typescript admin foss cross-platform

Software Site: https://code.visualstudio.com/

Software License: https://code.visualstudio.com/License

Software Source: https://github.com/Microsoft/vscode

Documentation: https://code.visualstudio.com/docs

Issues: https://github.com/Microsoft/vscode/issues

Summary: Visual Studio Code

Description: Build and debug modern web and cloud applications. Code is free and available on your favorite platform - Linux, Mac OSX, and Windows.

...SNIP...

Above is an example of using the info option with chocolatey.

Windows

PS C:\htb> choco install python vscode git wsl2 openssh openvpn

Chocolatey v0.10.15

Installing the following packages:

python;vscode;git;wsl2;openssh;openvpn

...SNIP...

Chocolatey installed 20/20 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

Installed:

- kb2919355 v1.0.20160915

- python v3.9.4

- kb3033929 v1.0.5

- chocolatey-core.extension v1.3.5.1

- kb2999226 v1.0.20181019

- python3 v3.9.4

- openssh v8.0.0.1

- vcredist2015 v14.0.24215.20170201

- gpg4win-vanilla v2.3.4.20191021

- vscode.install v1.55.1

- wsl2 v2.0.0.20210122

- kb2919442 v1.0.20160915

- openvpn v2.4.7

- git.install v2.31.1

- vscode v1.55.1

- vcredist140 v14.28.29913

- kb3035131 v1.0.3

- dotnet4.5.2 v4.5.2.20140902

- git v2.31.1

- chocolatey-windowsupdate.extension v1.0.4

PS C:\htb> RefreshEnv

We can see in the terminal above that choco installed the packages we requested and pulled in the required dependencies. Issuing the RefreshEnv command reloads the environment variables changed by these installations (such as PATH) into the current PowerShell session. At this point, we have the bulk of the core tools that enable our operations installed. To install other packages, the choco install pkg command is sufficient for pulling any additional operational tools we need. We have included a list of helpful packages that can aid us in completing a penetration test below. See the automation section further down to begin automating the installation of the tools and packages we commonly need and use.

Windows Terminal is Microsoft's modern GUI terminal application. It supports many different command-line tools, including Command Prompt, PowerShell, and Windows Subsystem for Linux. The terminal allows for customizable themes, configurations, command-line arguments, and custom actions. It is a versatile tool for managing multiple shell types and will quickly become a staple for most of us.

To install Terminal with Chocolatey:

Windows

PS C:\htb> choco install microsoft-windows-terminal

Chocolatey v0.10.15

2 validations performed. 1 success(es), 1 warning(s), and 0 error(s).

Validation Warnings:

- A pending system reboot request has been detected, however, this is

being ignored due to the current Chocolatey configuration. If you

want to halt when this occurs, then either set the global feature

using:

choco feature enable -name=exitOnRebootDetected

or pass the option --exit-when-reboot-detected.

Installing the following packages:

microsoft-windows-terminal

By installing you accept licenses for the packages.

Progress: Downloading microsoft-windows-terminal 1.6.10571.0... 100%

microsoft-windows-terminal v1.6.10571.0 [Approved]

microsoft-windows-terminal package files install completed. Performing other installation steps.

Progress: 100% - Processing The install of microsoft-windows-terminal was successful.

Software install location not explicitly set, could be in package or

default install location if installer.

Chocolatey installed 1/1 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

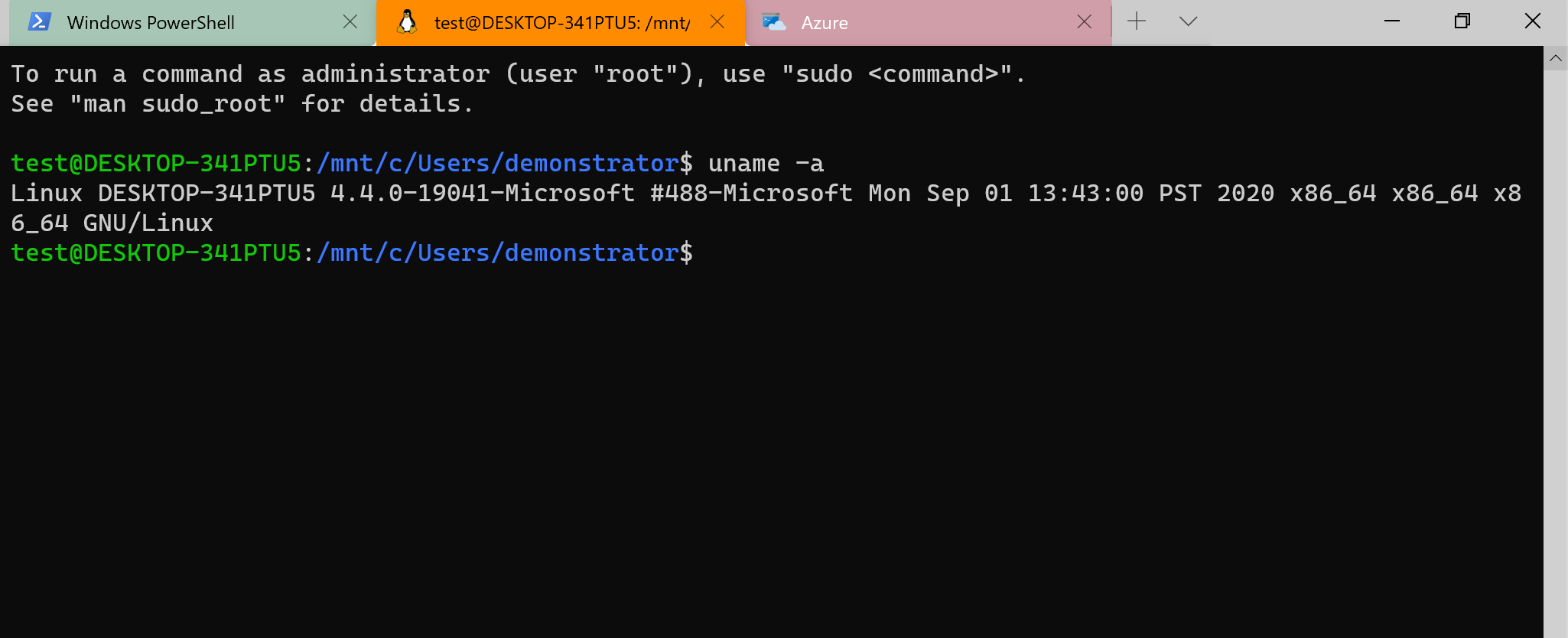

Windows Subsystem for Linux 2 (WSL2) is the second iteration of Microsoft's architecture that allows users to run Linux instances, providing the ability to run Bash scripts and other applications such as Vim, Python, and more. WSL also allows us to interact with the Windows operating system and file structure from a Unix instance. Best of all, we do not need to set up and manage a separate virtual machine in VirtualBox or Hyper-V ourselves; WSL2 runs its Linux kernel in a lightweight utility VM that Windows manages for us.

What does this mean for us? Being able to interact with and use Linux-native tools and applications from our Windows host gives us a hybrid environment with all the flexibility that comes with it. To install the subsystem, the quickest route is to use Chocolatey.

Chocolatey - WSL2

Windows

PS C:\htb> choco install WSL2

Chocolatey v0.10.15

2 validations performed. 1 success(es), 1 warning(s), and 0 error(s).

Installing the following packages:

wsl2

By installing you accept licenses for the packages.

Progress: Downloading wsl2 2.0.0.20210122... 100%

wsl2 v2.0.0.20210122 [Approved]

wsl2 package files install completed. Performing other installation steps.

...SNIP...

wsl2 may be able to be automatically uninstalled.

The install of wsl2 was successful.

Software installed as 'msi', install location is likely default.

Chocolatey installed 1/1 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

Once WSL is installed, we can add the Linux distribution of our choice. The most common one is Ubuntu, available in the Microsoft Store. The Linux distributions currently supported for WSL include, but are not limited to:

To install the distribution of our choice, we open its page in the Microsoft Store and install it from there. Once it is installed, we open a PowerShell prompt and type bash.

Moving forward, we can use it as a regular OS alongside our Windows install.
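
Two small checks help when we start mixing Linux and Windows tooling. WSL exposes the Windows drives under `/mnt/<drive letter>` by default, and WSL kernels include the string `microsoft` in their release name (for example `5.15.153.1-microsoft-standard-WSL2`). The bash sketch below relies on those two conventions. `is_wsl_kernel` and `win_to_wsl_path` are hypothetical helpers; in practice, the built-in `wslpath` utility performs the path conversion more robustly (custom automount roots, UNC paths).

```shell
#!/usr/bin/env bash
# is_wsl_kernel RELEASE  (hypothetical helper)
# Succeeds if the kernel release string looks like a WSL kernel, e.g.
# "5.15.153.1-microsoft-standard-WSL2" (WSL2) or "4.4.0-19041-Microsoft" (WSL1).
is_wsl_kernel() {
  case "${1,,}" in            # ${1,,} lowercases the argument (bash 4+)
    *microsoft*) return 0 ;;
    *) return 1 ;;
  esac
}

# win_to_wsl_path 'C:\Users\me\Documents' -> /mnt/c/Users/me/Documents
# Assumes the default automount root /mnt and a drive-letter path.
win_to_wsl_path() {
  local p="$1"
  local drive="${p:0:1}"
  local rest="${p:2}"         # drop the "C:" prefix
  rest="${rest//\\//}"        # turn every backslash into a forward slash
  printf '/mnt/%s%s\n' "${drive,,}" "$rest"
}
```

For example, `is_wsl_kernel "$(uname -r)" && cd "$(win_to_wsl_path 'C:\Users\demo\Documents\scripts')"` switches to the Windows scripts folder only when we are actually running inside WSL.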

Since we will be using this platform as a penetration testing host, we may run into issues with Windows Defender finding our tools unsavory. Windows Defender will scan, quarantine, or remove anything it deems potentially harmful. To make sure Defender does not mess up our plans, we will add some exclusion rules to ensure our tools stay in place.

Windows Defender Exemptions for the Tools' Folders.

C:\Users\your user here\AppData\Local\Temp\chocolatey\

C:\Users\your user here\Documents\git-repos\

C:\Users\your user here\Documents\scripts\

These three folders are just a start. As we add more tools and scripts, we may need to add more exclusions. To exclude these folders, we will run the following PowerShell command.

Adding Exclusions

Windows

PS C:\htb> Add-MpPreference -ExclusionPath "C:\Users\your user here\AppData\Local\Temp\chocolatey\"

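We can repeat the same command for each folder we wish to exclude. Since -ExclusionPath accepts an array, we can also pass several folders at once, separated by commas.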

Chocolatey for package management is an obvious choice for automating the initial install of core tools and applications. Combined with PowerShell, it's possible to design a script that pulls everything for us in one run. Here is an example script to install some of our requirements. As usual, before executing any scripts, we need to change the execution policy. Once we have our initial script built, we can modify it as our toolkit changes and reuse it to speed up our setup process.

Choco Build Script

Code: powershell

# Choco build script

Write-Host "*** Initial app install for core tools and packages. ***"

Write-Host "*** Configuring chocolatey ***"

# Temporarily skip the confirmation prompt for every package
choco feature enable --name="allowGlobalConfirmation"

Write-Host "*** Beginning install, go grab a coffee. ***"

# choco upgrade installs missing packages and updates those already present
choco upgrade wsl2 python git vscode openssh openvpn netcat nmap wireshark burp-suite-free-edition heidisql sysinternals putty golang neo4j-community openjdk

Write-Host "*** Build complete, restoring GlobalConfirmation policy. ***"

# Restore the default behavior of prompting before each install
choco feature disable --name="allowGlobalConfirmation"

When scripting with Chocolatey, the developers recommend several rules for us to follow:

- Always use choco or choco.exe as the command in your scripts. cup or cinst tends to misbehave when used in a script.

- When using short options such as -n, it is recommended that we use the extended option instead, such as --name.

- Do not use --force in scripts. It overrides Chocolatey's behavior.

Not all of our packages can be acquired from Chocolatey. Fortunately for us, most of what is left resides on GitHub. We can set up a script for these as well, downloading the repositories and binaries we need and extracting them to our scripts folder. Below, we will build out a quick example of a Git script. First, let us see what it looks like to clone a repository to our local host.

Git Clone

Windows

PS C:\htb> git clone https://github.com/dafthack/DomainPasswordSpray.git

Cloning into 'DomainPasswordSpray'...

remote: Enumerating objects: 149, done.

remote: Counting objects: 100% (6/6), done.

remote: Compressing objects: 100% (5/5), done.

Receiving objects: 94% (141/149)(delta 1), reused 5 (delta 1), pack-reused 143

Receiving objects: 100% (149/149), 51.70 KiB | 3.69 MiB/s, done.

Resolving deltas: 100% (52/52), done.

PS C:\Users\demonstrator\Documents\scripts> ls

Directory: C:\Users\demonstrator\Documents\scripts

Mode LastWriteTime Length Name

---- ------------- ------ ----

d----- 4/16/2021 4:58 PM DomainPasswordSpray

PS C:\Users\demonstrator\Documents\scripts> cd .\DomainPasswordSpray\

PS C:\Users\demonstrator\Documents\scripts\DomainPasswordSpray> ls

Directory: C:\Users\demonstrator\Documents\scripts\DomainPasswordSpray

Mode LastWriteTime Length Name

---- ------------- ------ ----

-a---- 4/16/2021 4:58 PM 19419 DomainPasswordSpray.ps1

-a---- 4/16/2021 4:58 PM 1086 LICENSE

-a---- 4/16/2021 4:58 PM 2678 README.md

We issued the git clone command with the URL of the repository we needed. From the output, we can tell that it created a new folder in our scripts folder and populated it with the files from the GitHub repository.
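
A Git script for this can be as simple as a loop over repository URLs. The sketch below assumes it runs from Git Bash or WSL (from WSL, point `SCRIPTS_DIR` at the Windows folder under `/mnt/c/...`). `SCRIPTS_DIR`, the `REPOS` array, and the helper names are illustrative. Repositories that already exist are updated with `git pull --ff-only` instead of being cloned again, so the script can safely be rerun at the start of each engagement.

```shell
#!/usr/bin/env bash
# Clone (or update) a list of tool repositories into one scripts folder.
# Assumptions: run from Git Bash or WSL; SCRIPTS_DIR and REPOS are examples.
SCRIPTS_DIR="${SCRIPTS_DIR:-$HOME/Documents/scripts}"
REPOS=(
  "https://github.com/dafthack/DomainPasswordSpray.git"
)

# repo_dir URL -> folder name git clone would create (last path part, minus .git)
repo_dir() {
  basename "${1%/}" .git
}

sync_repos() {
  mkdir -p "$SCRIPTS_DIR"
  local url dir
  for url in "${REPOS[@]}"; do
    dir="$SCRIPTS_DIR/$(repo_dir "$url")"
    if [ -d "$dir/.git" ]; then
      echo "[*] Updating $dir"
      git -C "$dir" pull --ff-only
    else
      echo "[*] Cloning $url"
      git clone "$url" "$dir"
    fi
  done
}
```

Calling `sync_repos` clones DomainPasswordSpray on the first run and only pulls new commits on later runs; adding a tool is a matter of appending its URL to `REPOS`.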

It is standard practice to prepare one's penetration testing VMs for the most common operating systems and their patch levels. This is especially necessary if we want to mirror our target machines and test our exploits before using them against real machines. For example, we can install Windows 10 VMs built on different patch levels and releases. This will save us considerable time during our penetration tests, as we will likely not need to configure them again. These VMs help us refine our approach and our exploits and better understand how an interconnected system might react, because we may only get one attempt to execute an exploit.

The good news is that we do not have to set up 20 VMs for this; we can work with snapshots instead. For example, we can start with Windows 10 version 1607 (OS build 14393), update the system step by step, and create a snapshot of the clean system after each update or patch. Updates and patches can be downloaded from the Microsoft Update Catalog: we just need to search for the KB article number, and there we will find the appropriate files to download and patch our systems.

Tools that can be used to install older versions of Windows:

Backups and recovery mechanisms are one of the most important safeguards against data loss, system compromise and business interruption.

When we simulate cyberattacks, we look at backups and recovery systems through a dual lens: as protective measures that can mitigate the damage and as high priority targets that attackers could exploit. To understand the importance of these systems, we need to examine how they perform under stress, what role they play in incident response, and in what real-world cases they have improved or degraded an organization's security. The best way to understand this is to develop our own backup and (disaster) recovery strategies.

For example, in a ransomware attack on Colonial Pipeline in 2021, the attackers encrypted key systems and demanded millions in ransom. The company's ability to quickly restore operations depended on robust backups that were isolated from the attacked network.

Recovery processes are equally important, as they determine how quickly a company can return to normal operations after an intrusion. As penetration testers, we can simulate scenarios in which systems are locked down or data is exfiltrated, and assess the speed and accuracy of the recovery, if the events are detected at all (yes, unfortunately this still happens). A poor recovery process can make the damage worse, as seen with Equifax in 2017, where a poor incident response compounded the exposure of 147 million people's data.

Let’s take a look at a few solutions that we can use to properly back up our system and restore it if necessary.

Pika Backup is a user-friendly backup solution with a GUI that allows us to create backups both locally and remotely. A big advantage is that it does not copy data it has already backed up; it only copies files that are new or have been modified since the last backup. Additionally, it supports encryption, which adds another layer of security, and it is easy to set up.

Backups and Recovery

# Update

cry0l1t3@hbt[/htb]$ sudo apt update -y

# Install Pika Backup

cry0l1t3@hbt[/htb]$ sudo apt install flatpak -y

cry0l1t3@hbt[/htb]$ flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo

cry0l1t3@hbt[/htb]$ flatpak install flathub org.gnome.World.PikaBackup

# Run Pika Backup

cry0l1t3@hbt[/htb]$ flatpak run org.gnome.World.PikaBackup

Once we have started Pika Backup, we can set up the backup process and configure it the way we need.

Pika Backup uses BorgBackup repositories, which are directories containing (encrypted and) deduplicated archives. Deduplication is a technique in which redundant copies of data are identified and stored only once, reducing storage space and improving efficiency. The data in an archive is split into smaller chunks, and each chunk is identified by a hash computed from its content. If a chunk's hash matches one that is already stored, the chunk is not stored again. In production environments where the host can be accessed through the internet (e.g., a web server), it is recommended to follow the 3-2-1 rule: keep at least three copies of the data, on two different types of storage media, with one copy stored off-site.
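
The deduplication idea can be demonstrated with standard tools. The following bash sketch is a deliberately simplified model: it splits a file into fixed-size chunks with `split`, hashes them with `sha256sum`, and counts how many distinct chunks would actually need to be stored. BorgBackup itself uses content-defined chunk boundaries (so that inserting a byte does not shift every later chunk) and, in encrypted repositories, keyed chunk IDs. `dedup_stats` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# dedup_stats FILE CHUNK_SIZE  (hypothetical helper)
# Prints "<total chunks> <unique chunks>" for FILE split into CHUNK_SIZE-byte chunks.
# Simplification: fixed-size chunks instead of Borg's content-defined chunking.
dedup_stats() {
  local file="$1" size="$2" tmp unique
  tmp="$(mktemp -d)"
  # -a 6 gives long enough suffixes (chunk_aaaaaa, chunk_aaaaab, ...) for big files
  split -a 6 -b "$size" "$file" "$tmp/chunk_"
  local chunks=("$tmp"/chunk_*)
  # Hash every chunk and count distinct hashes: identical chunks collapse to one
  unique="$(sha256sum "${chunks[@]}" | awk '{print $1}' | sort -u | wc -l)"
  rm -rf "$tmp"
  echo "${#chunks[@]} $((unique))"
}
```

A file consisting of the same block repeated many times reports many chunks but very few unique ones, which is exactly the space saving Borg exploits across successive backups of a mostly unchanged home directory.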

We can specify the location where the local repository can be created. In this example, we will use a connected HDD with a directory called backup.

Once the directory for the repository is specified, we can set up the passphrase that Pika Backup uses to encrypt all our archives. Since it is built on BorgBackup, Pika Backup relies on Borg's AES-256-CTR encryption, which provides strong security.
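
To get a feel for passphrase-based AES-256-CTR encryption, we can reproduce the idea with `openssl enc`. This is an illustration only, assuming OpenSSL 1.1.1 or newer (for `-pbkdf2`). Borg protects a randomly generated key with the passphrase and additionally authenticates its data with a MAC, which plain CTR mode as used here does not provide. The passphrase is read from the hypothetical `BACKUP_PASSPHRASE` environment variable so that it does not appear in the process list.

```shell
#!/usr/bin/env bash
# Round-trip a file through AES-256-CTR with a passphrase-derived key (PBKDF2).
# Assumptions: OpenSSL >= 1.1.1; BACKUP_PASSPHRASE must be set by the caller.

# encrypt_file PLAIN CIPHER
encrypt_file() {
  openssl enc -aes-256-ctr -pbkdf2 -salt -in "$1" -out "$2" -pass env:BACKUP_PASSPHRASE
}

# decrypt_file CIPHER PLAIN
decrypt_file() {
  openssl enc -d -aes-256-ctr -pbkdf2 -in "$1" -out "$2" -pass env:BACKUP_PASSPHRASE
}
```

With the wrong passphrase, CTR decryption still "succeeds" but produces garbage, which is one reason backup tools such as Borg add authentication on top of the cipher.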

After the encryption has been set, we can move on and start the first backup or dive deeper into the configuration, exploring settings such as the schedule and the included and excluded files and directories. In this example, we are going to back up just the home directory, as shown in the Files to Back Up section, and exclude all caches.

For the archives, we can specify an archive index of our choice. We can also run a data integrity check manually, which verifies that the stored backup archives are consistent and have not been damaged.

In the Schedule tab, we can specify how often a backup is created. A common recommendation is to create a backup daily and keep the daily archives for a two-week period.
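Pika Backup handles scheduling from its Schedule tab. To make a "daily, keep two weeks" policy concrete outside of any GUI, here is a minimal sketch for a plain directory of tarballs. The helper names (make_backup, prune_backups), the backup-YYYY-MM-DD.tar.gz naming scheme, and the directories are hypothetical; this is not how Pika Backup stores its archives.

```shell
#!/usr/bin/env bash
# Hypothetical daily-backup helper with a 14-day retention window.
# SOURCE_DIR and BACKUP_DIR default to temporary directories for this demo.
set -euo pipefail

SOURCE_DIR=${SOURCE_DIR:-$(mktemp -d)}
BACKUP_DIR=${BACKUP_DIR:-$(mktemp -d)}
KEEP_DAYS=14

# Create today's archive of $1 in $2, named after the current date (YYYY-MM-DD).
make_backup() {
  tar -czf "$2/backup-$(date +%F).tar.gz" -C "$1" .
}

# Delete archives in $1 that are $2 or more days old.
# find -mtime +N matches files whose age in whole days is greater than N.
prune_backups() {
  find "$1" -maxdepth 1 -type f -name 'backup-*.tar.gz' \
    -mtime +"$(($2 - 1))" -delete
}

make_backup "$SOURCE_DIR" "$BACKUP_DIR"
prune_backups "$BACKUP_DIR" "$KEEP_DAYS"
ls -l "$BACKUP_DIR"
```

Run once a day, for example from cron with an entry such as `0 2 * * * /usr/local/bin/daily-backup.sh` (the script path is hypothetical), this keeps roughly the last two weeks of daily archives.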

Once everything is set up, we can start the backup process and wait until it has finished.

Duplicati is another cross-platform backup solution with strong capabilities. It also supports AES-256 encryption, with optional asymmetric encryption using GPG (RSA or similar for key exchange). Data is encrypted client-side, and the encryption key is derived from the passphrase using strong password-based key derivation. A great advantage for many users is the large number of remote storage destinations Duplicati supports.
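Client-side encryption with a passphrase-derived key can be illustrated with the openssl command-line tool. This is only a sketch of the concept, assuming OpenSSL 1.1.1 or later (for -pbkdf2); Duplicati handles encryption internally with its own file format, and openssl enc adds no integrity check (MAC), so this is not a substitute for a real backup tool. The passphrase is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: encrypt a file client-side with AES-256 and a passphrase-derived
# key (PBKDF2), then decrypt it and check the round trip.
# Assumes OpenSSL >= 1.1.1. The passphrase below is a placeholder.
set -euo pipefail

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf 'sample data to back up\n' > "$workdir/data.txt"

# Read the passphrase from an environment variable instead of the command line.
export BACKUP_PASSPHRASE='change-me-example-only'

# Encrypt before the data leaves the machine: random salt, key derived
# from the passphrase with PBKDF2 (200000 iterations).
openssl enc -aes-256-ctr -pbkdf2 -iter 200000 -salt \
  -in "$workdir/data.txt" -out "$workdir/data.txt.enc" \
  -pass env:BACKUP_PASSPHRASE

# Decrypt with the same parameters to simulate a restore.
openssl enc -d -aes-256-ctr -pbkdf2 -iter 200000 \
  -in "$workdir/data.txt.enc" -out "$workdir/data.restored.txt" \
  -pass env:BACKUP_PASSPHRASE

if cmp -s "$workdir/data.txt" "$workdir/data.restored.txt"; then
  echo "round trip OK"
else
  echo "round trip FAILED" >&2
  exit 1
fi
```

Only the encrypted .enc file would be uploaded; without the passphrase, the remote destination holds nothing readable.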

The installation process is also very simple, but instead of a native desktop GUI, Duplicati is managed through a web interface served by a local web server. Let's download the installation package and install it.


cry0l1t3@htb[/htb]$ cd ~/Downloads

cry0l1t3@htb[/htb]$ sudo apt install ./duplicati-2.1.0.5_stable_2025-03-04-linux-x64-gui.deb

cry0l1t3@htb[/htb]$ duplicati

Once installed, we can navigate to http://localhost:8200 to open the Duplicati web interface.

In the Add backup tab, we can specify the general backup settings: a name for the backup, the encryption method, and its passphrase.

In this example, we will configure Duplicati to send its backups to a remote server. One of the most recommended and most secure methods is transferring the files over SSH/SFTP with key-based authentication. Therefore, we need to generate a dedicated SSH key with the ssh-keygen command.


cry0l1t3@htb[/htb]$ ssh-keygen -t ed25519
Generating public/private ed25519 key pair.
Enter file in which to save the key (/home/cry0l1t3/.ssh/id_ed25519): duplicati
Enter passphrase (empty for no passphrase): ******************
Enter same passphrase again: ******************
Your identification has been saved in duplicati
Your public key has been saved in duplicati.pub
The key fingerprint is:
SHA256:2mKNI0ZOfVMuwkFenV4NtUv0hwiHTir0gGYfR/Lhm8Q cry0l1t3@ubuntu
The key's randomart image is:
+--[ED25519 256]--+
| .o.+..oo+o |
| +o+*..=o.o.+ |
| o oo=E=... + o|
| oooo=o . ..|
| o +.S . . |
| + B o |
| + * o |
| . o o |
| |
+----[SHA256]-----+

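Once the SSH key has been created, we can specify the storage type, the remote IP address and port, the path on the server, and the username, and in the Advanced options, we can add the ssh-key authentication method. The public key (duplicati.pub) must also be added to the authorized_keys file of the corresponding user on the remote server; otherwise, the key-based login will fail.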
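The key generation above can also be scripted, and the line for the server's authorized_keys file can be prepared in the same step. A sketch, assuming OpenSSH 7.2 or later on the server (for the restrict option); the temporary key directory and the duplicati-backup comment are illustrative choices, and -N '' (no passphrase) is used only to keep this demo non-interactive, whereas the interactive run above protects the key with a passphrase.

```shell
#!/usr/bin/env bash
# Sketch: create a dedicated backup key and build the authorized_keys line
# for the backup user on the remote server.
set -euo pipefail

keydir=$(mktemp -d)   # in practice a protected location such as ~/.ssh
ssh-keygen -q -t ed25519 -N '' -C 'duplicati-backup' -f "$keydir/duplicati"

# "restrict" turns off port, agent and X11 forwarding and PTY allocation for
# this key; SFTP transfers do not need any of them.
auth_line="restrict $(cat "$keydir/duplicati.pub")"

echo "Append this line to ~/.ssh/authorized_keys of the backup user:"
echo "$auth_line"

# Show the fingerprint so it can be compared when connecting later.
ssh-keygen -l -f "$keydir/duplicati.pub"
```

Limiting what the backup key can do means that a stolen key grants file transfer access, not a comfortable interactive foothold with forwarding.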

After that, we can select the source data that needs to be backed up. A major advantage of Duplicati is its filter functionality, which supports regular expressions for including and excluding files.
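Before adding a regular-expression filter, it can help to preview which paths it would match. This sketch uses grep -E (POSIX extended regular expressions) on a hypothetical list of paths; Duplicati evaluates its filters with the .NET regex engine, so simple patterns like this one behave the same, but more advanced syntax may differ.

```shell
#!/usr/bin/env bash
# Sketch: preview what an exclude regex would match before using it as a filter.
# The paths and the pattern are hypothetical examples.
set -euo pipefail

paths='/home/cry0l1t3/Documents/report.docx
/home/cry0l1t3/.cache/thumbnails/preview.png
/home/cry0l1t3/Downloads/installer.tmp
/home/cry0l1t3/Projects/notes.md'

# Exclude anything inside a .cache directory and any file ending in .tmp.
exclude_regex='(/\.cache/|\.tmp$)'

# "|| true" keeps set -e from aborting when a grep finds no lines.
excluded=$(printf '%s\n' "$paths" | grep -E "$exclude_regex" || true)
kept=$(printf '%s\n' "$paths" | grep -Ev "$exclude_regex" || true)

printf 'Excluded:\n%s\n\nBacked up:\n%s\n' "$excluded" "$kept"
```

Feeding the output of `find ~ -type f` into the same two grep commands gives a quick dry run against the real source directory.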

As the last step, we can configure the backup schedule.

It is highly recommended that your backups, especially those stored on a remote server, are encrypted both at rest and in transit. This means the data should be encrypted while it is stored in the repository and while it is being transferred. Both Pika Backup and Duplicati support this and can therefore help organizations meet the requirements of regulations such as GDPR and HIPAA.

We also recommend simulating a disaster recovery situation in which your server suddenly stops working properly or data has been lost, and you need to restore its last state from scratch. Going through this process shows you what can happen during a recovery and whether your process works as expected. Keep notes and document the process step by step so that you have a continuity plan in place that you can use to restore your data. We recommend repeating this simulation twice a year or once a quarter to ensure that the process still works as expected, because when things break, your continuity plan is your last resort for restoring everything you have built.
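During a recovery drill, one concrete check is whether the restored files match the originals. This sketch records SHA-256 checksums before the backup and verifies them after the restore; the plain cp stands in for the actual restore from Pika Backup or Duplicati, and the directories and the verify_restore helper are placeholders for illustration.

```shell
#!/usr/bin/env bash
# Sketch: checksum manifest before backup, verification after restore.
set -euo pipefail

src=$(mktemp -d)        # stands in for the original data
restore=$(mktemp -d)    # stands in for the restore target
manifest=$(mktemp)      # checksum list, kept outside the backed-up data

mkdir -p "$src/docs"
printf 'alpha\n' > "$src/docs/a.txt"
printf 'beta\n'  > "$src/b.txt"

# 1. Before the backup: record a checksum for every file (relative paths).
(cd "$src" && find . -type f -print0 | sort -z | xargs -0 sha256sum) > "$manifest"

# 2. Simulated restore; in a real drill, restore from the backup tool instead.
cp -a "$src/." "$restore/"

# 3. After the restore: every file must match its recorded checksum.
verify_restore() {
  (cd "$1" && sha256sum --quiet -c "$2")
}

if verify_restore "$restore" "$manifest"; then
  echo "restore verified: all checksums match"
else
  echo "restore check FAILED" >&2
  exit 1
fi
```

Keeping the manifest together with the written recovery notes gives the next drill a fixed reference to compare against.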

A Virtual Private Server (VPS) is an isolated environment created on a physical server using virtualization technology. A VPS is fundamentally part of Infrastructure-as-a-Service (IaaS) solutions. This solution offers all the advantages of an ordinary server, with specially allocated resources and complete management freedom. We can freely choose the operating system and applications we want to use, with no configuration restrictions. This VM uses the resources of a physical server and provides users with various server functionalities comparable to those of a dedicated server. It is therefore also referred to as a Virtual Dedicated Server (VDS).

A VPS positions itself as a compromise between inexpensive shared hosting and the usually costly rental of dedicated server hardware. The idea behind this hosting model is to offer users the most comprehensive range of functions at manageable prices. For a hosting provider, virtually replicating individual computer systems on a standard host system involves significantly less effort than providing separate hardware for each customer. Encapsulation keeps the individual guest systems largely independent: each VPS on the shared hardware runs isolated from the other operating systems running in parallel. Common uses for a VPS include, but are not limited to, the following:

| Webserver | LAMP/XAMPP stack | Mail server | DNS server |

| Development server | Proxy server | Test server | Code repository |

| Pentesting | VPN | Gaming server | C2 server |

We can choose from a range of Windows and Linux operating systems to provide the required operating environment for our desired application or software during installation. Windows servers can cost twice as much as Linux servers on some providers. You should keep this in mind when selecting a provider.

| Provider | Price | RAM | CPU Cores | Storage | Transfer/Bandwidth |

| Linode | $12/mo | 2GB | 1 CPU | 50 GB | 2 TB |

| DigitalOcean | $10/mo | 2GB | 1 CPU | 50 GB | 2 TB |

| OneHostCloud | $14.99/mo | 2GB | 1 CPU | 50 GB | 2 TB |

Whether we are performing an internal or an external penetration test, having a VPS at our disposal is valuable in many situations. We can store all our resources on it and access them from almost anywhere with internet access. Besides setting up the VPS itself, we also have to prepare the corresponding folder structure and services. In this example, we will use the provider Linode and set up our VPS there. For the other providers, the configuration options look almost identical.

First, we need to select the location closest to us, which ensures a good connection to our server. If we need to perform a penetration test on another continent, we can also set up a VPS there using our automation scripts and perform the penetration test from that location. We should read the details of each option carefully and understand what kind of VPS we will be working with. In Linode, we can choose from four different server types; for our purposes, the Shared CPU server is sufficient for now. Under Linux Distribution, we select the operating system that should be installed on the VPS. Like Ubuntu, ParrotOS is based on Debian. We can choose one of these two or go to the advanced options and upload our own ISO.

Linode’s Marketplace offers many different applications that have been preconfigured and can be installed right away.

Next, we need to choose the performance level for our VPS. This is one of the points where the cost can vary greatly. For our purposes, one of the first two options at the top is sufficient. However, the right choice depends on what we want to use the VPS for. If the VPS is used for advanced purposes and has to handle many requests and services, 1024 MB of memory will not be enough. Therefore, it is always advisable to first install our OS locally in a VM and check the load our services generate.
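To compare a local test VM against a VPS plan, it helps to look at memory use and load while the intended services are running. A Linux-only sketch that reads /proc directly; the numbers it prints depend entirely on the machine it runs on.

```shell
#!/usr/bin/env bash
# Sketch: snapshot of memory use and CPU load on a Linux VM.
# Run it while the services planned for the VPS are under realistic load.
set -euo pipefail

mem_total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
mem_avail_kb=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)

total_mb=$((mem_total_kb / 1024))
used_mb=$(((mem_total_kb - mem_avail_kb) / 1024))

# The first field of /proc/loadavg is the 1-minute load average.
read -r load1 _ < /proc/loadavg
cores=$(nproc)

echo "Memory in use: ${used_mb} MiB of ${total_mb} MiB"
echo "1-minute load average: ${load1} (on ${cores} CPU cores)"
```

If the memory in use approaches the RAM of the intended plan (for example, 1024 MB), or the load average stays above the number of cores, a larger plan is likely needed.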

After that, in the Security section, we need to specify the root password, and we can also add SSH keys, which we can use to log in to the VPS via SSH later. These keys can be generated on the VPS, in a VM, or on our own host operating system. Let's use our VM to generate a new SSH key pair and add it to our VPS.

Generate SSH Keys


┌─[cry0l1t3@parrot]─[~]
└──╼ $ ssh-keygen -t ed25519 -f vps-ssh
Generating public/private ed25519 key pair.
Enter passphrase (empty for no passphrase): ******************
Enter same passphrase again: ******************
Your identification has been saved in vps-ssh
Your public key has been saved in vps-ssh.pub
The key fingerprint is:
SHA256:zXyVAWK00000000000000000000VS4a/f0000+ag cry0l1t3@parrot
The key's randomart image is:
<SNIP>

With the command shown above, we generate a key pair consisting of two files. vps-ssh is the private key and must never be shared anywhere or with anyone. The second file, vps-ssh.pub, is the public key, which we can now insert in the Linode control panel.

SSH Keys


┌─[cry0l1t3@parrot]─[~]
└──╼ $ ls -l vps*
-rw------- 1 cry0l1t3 cry0l1t3 3434 Mar 30 12:23 vps-ssh
-rw-r--r-- 1 cry0l1t3 cry0l1t3 741 Mar 30 12:23 vps-ssh.pub
┌─[cry0l1t3@parrot]─[~]
└──╼ $ cat vps-ssh.pub

Once everything is set up, we can click on Create Linode and wait until the installation has finished. After the VPS is ready, we can access it via SSH.

SSH Using Password


cry0l1t3@htb[/htb]$ ssh root@<vps-ip-address>

root@VPS's password:

[root@VPS ~]#

After that, we should add a new user on the VPS so that we do not run our services with root or administrator privileges. We can then generate another SSH key and add it for this user.

Adding a New Sudo User


[root@VPS ~]# adduser cry0l1t3

[root@VPS ~]# usermod -aG sudo cry0l1t3

[root@VPS ~]# su - cry0l1t3

[cry0l1t3@VPS ~]$

Adding Public SSH Key to VPS


[cry0l1t3@VPS ~]$ mkdir -p ~/.ssh

[cry0l1t3@VPS ~]$ chmod 700 ~/.ssh

[cry0l1t3@VPS ~]$ echo '<vps-ssh.pub>' >> ~/.ssh/authorized_keys

[cry0l1t3@VPS ~]$ chmod 600 ~/.ssh/authorized_keys
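The same steps can be wrapped in a small, repeatable function that also avoids adding the same key twice. A sketch: the function name install_pubkey and the example key string are hypothetical (the string is not a real key), and the demo runs against a temporary directory instead of a real home directory. If password login is still enabled, ssh-copy-id -i vps-ssh.pub cry0l1t3@<vps-ip-address> does the equivalent from the client side.

```shell
#!/usr/bin/env bash
# Sketch: add a public key to a user's authorized_keys with safe permissions.
set -euo pipefail

# install_pubkey HOME_DIR "PUBLIC_KEY_LINE"   (hypothetical helper)
install_pubkey() {
  local home_dir=$1 pubkey=$2
  local ssh_dir="$home_dir/.ssh"
  local auth_file="$ssh_dir/authorized_keys"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"          # only the owner may access ~/.ssh
  touch "$auth_file"
  chmod 600 "$auth_file"        # only the owner may read/write the key list

  # Append the key only if this exact line is not present yet.
  grep -qxF -- "$pubkey" "$auth_file" || printf '%s\n' "$pubkey" >> "$auth_file"
}

# Demo against a temporary "home directory" with a placeholder key line.
demo_home=$(mktemp -d)
example_key='ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIExamplePlaceholderKeyOnly cry0l1t3@parrot'
install_pubkey "$demo_home" "$example_key"
install_pubkey "$demo_home" "$example_key"   # second call changes nothing
cat "$demo_home/.ssh/authorized_keys"
```

Running it twice leaves a single key line, so the function can safely be part of a provisioning script that is re-run.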

Once we have added this to the authorized_keys file, we can use the private key to log in to the system via SSH.

Using SSH Keys


cry0l1t3@htb[/htb]$ ssh -i vps-ssh cry0l1t3@<vps-ip-address>

[cry0l1t3@VPS ~]$
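To avoid typing the key path and address every time, a host alias can be added to the client's SSH configuration. A sketch: the alias vps and the key location ~/.ssh/vps-ssh are assumptions (move the key there or adjust the path), and the entry is written to a temporary file rather than to ~/.ssh/config.

```shell
#!/usr/bin/env bash
# Sketch: an ~/.ssh/config entry for the VPS (written to a temp file here).
set -euo pipefail

config_file=${CONFIG_FILE:-$(mktemp)}   # normally ~/.ssh/config

# The quoted 'EOF' keeps "~" literal; ssh expands it itself when reading the file.
cat >> "$config_file" <<'EOF'
Host vps
    HostName <vps-ip-address>
    User cry0l1t3
    IdentityFile ~/.ssh/vps-ssh
    IdentitiesOnly yes
EOF

cat "$config_file"
```

With this entry in ~/.ssh/config and the placeholder replaced by the real address, `ssh vps` is equivalent to the longer command above; IdentitiesOnly makes ssh offer only this key instead of every key loaded in the agent.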

When it comes to server management, one of the most critical aspects (besides security) is keeping the server fast, reliable, and ready to handle the tasks it was built for. It is therefore highly recommended to keep the server up to date and to give it enough resources to handle everything it needs to run. Another important aspect is access to the server. Instead of setting up all the services we want to use first and restricting access to the server afterward, we will do it the other way around; otherwise, some access points to our own server might be overlooked, leaving us vulnerable. Therefore, when we start building a remote server, we first ensure that it has only one way of access, which uses a secure protocol. The best approach is to make sure that, from the beginning, the server can be accessed only through SSH using an authentication key and not a password.
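A key-only SSH setup like the one described can be expressed as a small sshd configuration drop-in. This is a sketch, assuming a distribution whose /etc/ssh/sshd_config includes /etc/ssh/sshd_config.d/*.conf near the top (recent Debian and Ubuntu releases do; check with grep Include /etc/ssh/sshd_config). The file name 00-hardening.conf is our own choice, KbdInteractiveAuthentication was called ChallengeResponseAuthentication before OpenSSH 8.7, and the demo writes to a temporary directory.

```shell
#!/usr/bin/env bash
# Sketch: sshd drop-in that allows key-based logins only.
# On the VPS the target directory is /etc/ssh/sshd_config.d (needs sudo);
# a temporary directory is used here so the sketch runs anywhere.
set -euo pipefail

conf_dir=${CONF_DIR:-$(mktemp -d)}

cat > "$conf_dir/00-hardening.conf" <<'EOF'
# sshd uses the first value it reads for each keyword, so a low-numbered
# drop-in included at the top of sshd_config takes precedence.
PermitRootLogin no
PasswordAuthentication no
KbdInteractiveAuthentication no
PubkeyAuthentication yes
EOF

echo "Wrote $conf_dir/00-hardening.conf"

# On the VPS, after confirming key login works for the sudo user, check the
# syntax and reload (the service is called "sshd" on some distributions):
#   sudo sshd -t && sudo systemctl reload ssh
```

Keeping the existing SSH session open while reloading means a mistake in the configuration does not lock us out of the VPS.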

Hardening SSH access is one important aspect; key management is another. When we have to manage just a few servers, this is fairly easy and straightforward. When we are responsible for a few hundred servers, however, keys must be managed efficiently. In such cases, organizations and businesses often use a combination of tools such as Teleport and Ansible.

When configuring your server, keep the Zero Trust principle in mind: no one and nothing, whether inside or outside a network, is trusted automatically. Think of it like a high-security building. Even as an employee, you need to show your ID and pass a checkpoint every time you enter, and you only get access to the rooms you need.